7 Proven Models for Sample Evaluation Questions in Corporate Training

In corporate training, the right questions separate impactful learning from expensive exercises. Generic feedback forms that ask "Did you enjoy the session?" tell you little about actual knowledge retention, tangible behavior change, or measurable business results. To truly gauge the ROI of your learning and development initiatives, you need a more structured and strategic approach. Superficial assessments won't cut it; a solid grasp of formative versus summative feedback is essential for building a robust evaluation framework.

This guide walks through seven evaluation models, each with sample evaluation questions you can adapt directly for your corporate training programs. We will move beyond simple satisfaction surveys to uncover actionable insights that drive employee performance and demonstrate the value of your training, and we'll explore how to structure your questions to measure everything from initial reactions to long-term business impact.

Furthermore, we'll highlight how interactive video platforms like Mindstamp can embed these questions directly into your training content. This allows you to capture crucial data in real time, boost learner engagement, and turn passive video consumption into an active, measurable learning experience. Get ready to ask better questions and get better results.

1. Kirkpatrick's Four-Level Evaluation Model Questions

The Kirkpatrick Model is a globally recognized framework for evaluating the effectiveness of corporate training programs. Developed by Donald Kirkpatrick in the 1950s, this model provides a systematic approach to assessing a program's value by breaking the evaluation process into four distinct levels. This structure allows learning and development (L&D) professionals to gather comprehensive feedback, from immediate learner satisfaction to long-term business impact.

This method is ideal for corporate training programs where demonstrating return on investment (ROI) is crucial. By collecting data at each level, you can build a compelling case for the training's success and identify specific areas for improvement. For instance, a sales team's new product training can be evaluated not just on whether they liked the training, but whether they learned the product specs, applied new sales techniques, and ultimately increased sales figures.

The Four Levels of Evaluation

The model’s power lies in its tiered structure, where each level builds upon the previous one, providing a progressively deeper understanding of the training's impact.

- Level 1: Reaction. This level measures how participants felt about the training. Did they find it engaging, relevant, and well-presented? Questions here focus on satisfaction.

- Level 2: Learning. Here, you assess the increase in knowledge, skills, or changes in attitude. Pre- and post-assessments are common tools at this stage.

- Level 3: Behavior. This crucial level examines whether participants are applying what they learned back on the job. It measures the transfer of knowledge to workplace performance.

- Level 4: Results. The final level connects the training to tangible business outcomes. This could include increased productivity, higher profits, reduced costs, or improved quality.

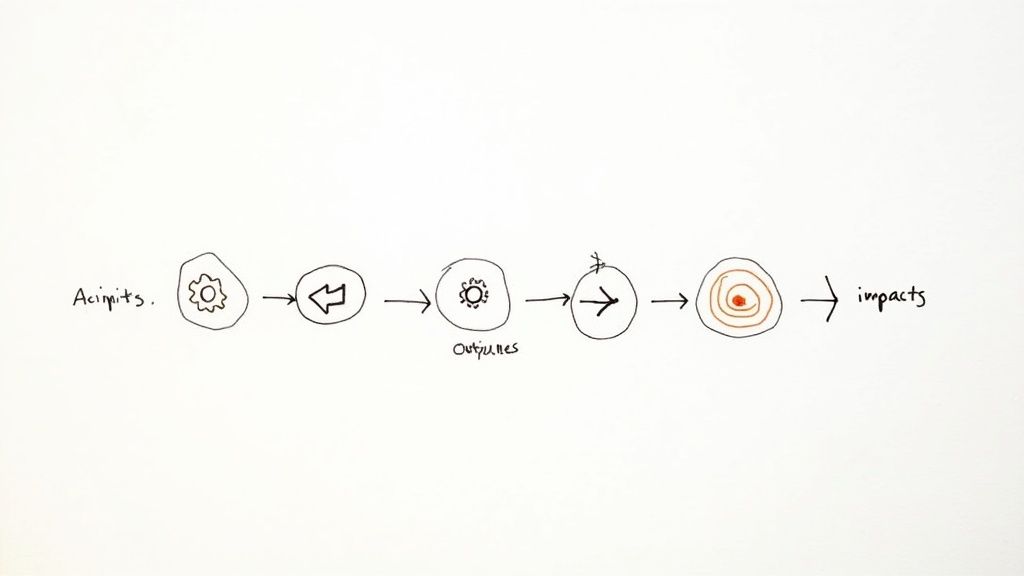

Each evaluation level builds logically on the one before it. The progression from initial reaction to applied behavior lays the foundation for measuring organizational results and creates a comprehensive picture of training effectiveness, from participant satisfaction to tangible workplace application.

Sample Evaluation Questions for Each Level

Here are some sample evaluation questions you can adapt for your corporate programs:

- (Level 1 - Reaction): "On a scale of 1-5, how relevant was this training to your daily tasks?"

- (Level 2 - Learning): "Describe the three key steps for completing the new compliance process."

- (Level 3 - Behavior): "Provide an example of how you have used the negotiation techniques from the workshop in the past month."

- (Level 4 - Results): "What has been the impact of the new safety protocols on the number of workplace incidents this quarter?"

For L&D managers, these sample evaluation questions are more than just a checklist; they are diagnostic tools. By using a tiered approach, you can pinpoint exactly where a program is succeeding or falling short. To explore this and other frameworks in greater detail, learn more about different training evaluation methods on mindstamp.com.
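As a rough illustration of the Level 2 pre- and post-assessment comparison described above, the Python sketch below computes a normalized learning gain for each learner. The learner names, the 0-100 scoring scale, and the choice of normalized gain as the metric are assumptions made for illustration; they are not part of the Kirkpatrick Model itself.

```python
from statistics import mean


def normalized_gain(pre: float, post: float, max_score: float = 100.0) -> float:
    """Share of the possible improvement that a learner actually achieved."""
    if pre >= max_score:
        return 0.0  # learner was already at the ceiling, so no gain is possible
    return (post - pre) / (max_score - pre)


# Hypothetical (pre, post) assessment scores in percent correct.
scores = {
    "learner_a": (40.0, 70.0),
    "learner_b": (60.0, 80.0),
    "learner_c": (50.0, 50.0),
}

# Gain per learner, then the average across the cohort.
gains = {name: normalized_gain(pre, post) for name, (pre, post) in scores.items()}
average_gain = mean(gains.values())

for name, gain in gains.items():
    print(f"{name}: {gain:.2f}")
print(f"cohort average: {average_gain:.2f}")
```

Dividing by the room left to improve keeps learners who started with high scores from looking like weak performers. A raw post-minus-pre difference would also work if your assessments have no meaningful ceiling.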

2. CIPP Model Evaluation Questions (Context, Input, Process, Product)

The CIPP Model is a comprehensive evaluation framework designed to guide decision-making and assess a program's overall merit and worth. Developed by Daniel Stufflebeam, it breaks evaluation into four key aspects: Context, Input, Process, and Product. This holistic approach helps organizations systematically improve, plan, and justify their training initiatives by looking beyond simple outcomes.

This method is particularly valuable for complex, long-term corporate training programs where understanding the "why" and "how" is as important as the final results. For example, when launching a company-wide leadership development program, the CIPP model allows L&D professionals to evaluate not only if leaders improved their skills, but also if the program addressed the right organizational needs, used the best resources, and was implemented effectively.

This structured approach ensures that every phase of a program is scrutinized, providing a 360-degree view that supports continuous improvement and strategic alignment.

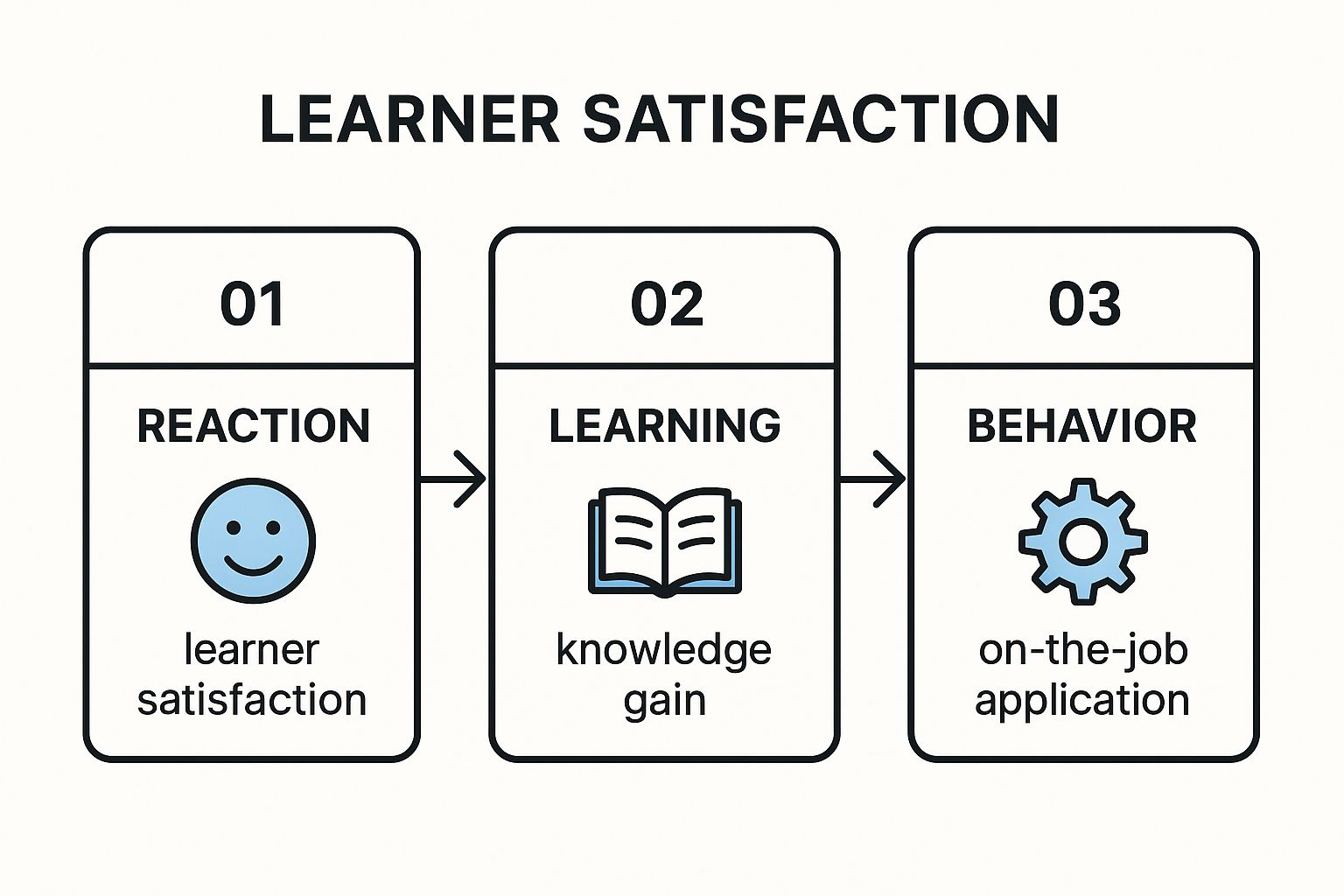

The Four Dimensions of Evaluation

The CIPP model's strength is its cyclical and comprehensive nature, providing timely feedback at every stage of the program lifecycle.

- Context. This dimension assesses the needs, challenges, and opportunities that justify the program. It answers the question, "What do we need to do?"

- Input. Here, you evaluate the resources, strategies, and plans designed to meet the identified needs. It asks, "How should we do it?"

- Process. This aspect focuses on monitoring program implementation. It checks for discrepancies between the plan and its actual execution.

- Product. Finally, this dimension measures the outcomes and overall effectiveness of the program, both intended and unintended.

Sample Evaluation Questions for Each Dimension

Here are some sample evaluation questions you can adapt for your training programs:

- (Context): "What are the most critical skill gaps our new onboarding program needs to address?"

- (Input): "Are the selected training modules and interactive video resources the most effective way to achieve our learning objectives?"

- (Process): "Were the training sessions delivered as planned, and did participants actively engage with the material?"

- (Product): "To what extent has the new sales training program contributed to the Q3 increase in regional sales?"

For training managers, using these sample evaluation questions from the CIPP model provides a robust framework for both formative (improving the program as it runs) and summative (judging its final worth) evaluation. It moves beyond just measuring results to understanding the entire ecosystem of a training initiative. To delve deeper into this and other structured evaluation techniques, explore the resources available from the Western Michigan University Evaluation Center.

3. Logic Model Evaluation Questions

The Logic Model is a systematic, visual way to represent the theory behind a training program. It illustrates the logical connections between the resources you invest (Inputs), the actions you take (Activities), what you produce (Outputs), and the changes or benefits that result (Outcomes and Impacts). This framework helps stakeholders understand the program's causal chain from start to finish, which is essential for proving ROI.

This method is particularly powerful for complex, multi-stage training programs where it's essential to understand not just if a program worked, but how and why. For instance, a leadership development program can use a logic model to trace the path from providing coaching sessions (activities) to managers demonstrating new leadership skills (outcomes), ultimately leading to improved team retention and productivity (impacts).

The Core Components of a Logic Model

The model’s strength is in breaking down a program into its essential components, making it easier to evaluate each link in the chain.

- Inputs: The resources needed for the program, such as budget, L&D staff, training platforms like Mindstamp, and course materials.

- Activities: The actions, events, and processes that constitute the program, like workshops, video training, or coaching.

- Outputs: The direct, tangible products of program activities. For training, this could be the number of employees trained or the number of interactive video modules completed.

- Outcomes: The short-term and medium-term changes that result from the program, such as new knowledge, improved skills, or changed attitudes.

- Impact: The long-term, ultimate intended change, often linked to broader organizational goals like increased revenue, improved safety records, or a more positive workplace culture.

This flow provides a clear roadmap for your program, showing how day-to-day training activities are expected to lead to significant, long-term business results.
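One way to make the chain above concrete is to write it down as a small data structure and check which links are still empty. The following Python sketch is only an illustration: the `LogicModel` class, its `missing_links` helper, and the example entries are hypothetical names introduced here, loosely based on the leadership development example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class LogicModel:
    """One list per component, in the order of the causal chain."""
    inputs: List[str] = field(default_factory=list)
    activities: List[str] = field(default_factory=list)
    outputs: List[str] = field(default_factory=list)
    outcomes: List[str] = field(default_factory=list)
    impact: List[str] = field(default_factory=list)

    def missing_links(self) -> List[str]:
        """Return the components that have no entries yet."""
        return [name for name, items in vars(self).items() if not items]


# Hypothetical leadership development program with no impact defined yet.
program = LogicModel(
    inputs=["budget", "L&D staff"],
    activities=["coaching sessions"],
    outputs=["number of managers coached"],
    outcomes=["managers demonstrate new leadership skills"],
)

gaps = program.missing_links()
print(gaps)  # prints ['impact']
```

An empty component is a prompt for discussion rather than an error: here it signals that the team has not yet agreed on the long-term change the program is meant to produce.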

Sample Evaluation Questions for Each Component

Here are some sample evaluation questions you can adapt for your programs based on the logic model:

- (Inputs): "Did we have sufficient budget and staffing to execute the planned training activities effectively?"

- (Activities): "Were the training workshops and online modules delivered as scheduled and to the intended audience?"

- (Outputs): "How many participants successfully completed the compliance training module by the deadline?"

- (Outcomes): "To what extent have employees demonstrated improved data analysis skills since completing the course?"

- (Impact): "Has the new sales enablement program contributed to a measurable increase in quarterly sales revenue?"

For L&D managers, these sample evaluation questions are crucial for diagnosing the health of an initiative at every stage. They help pinpoint where the program's theory is holding true and where it might be breaking down. To effectively measure the outcomes and impact of your training, it is important to integrate high-quality evaluation into your design; learn more about the role of assessment in learning on mindstamp.com.

4. Most Significant Change (MSC) Technique Questions

The Most Significant Change (MSC) technique is a participatory, qualitative evaluation method that moves beyond predefined metrics to uncover unexpected outcomes. Developed by Rick Davies, this approach involves collecting and systematically analyzing personal stories of change from program participants. It focuses on identifying what employees and managers value most, providing deep insights into the human impact of a training initiative.

This method is particularly powerful for complex programs where outcomes are not easily quantifiable, such as leadership development or cultural change initiatives. For example, instead of just asking if a manager feels more confident after a training program, MSC prompts them to share a specific story about a time they applied a new leadership skill and the impact it had. This narrative approach captures rich, contextual data that traditional surveys often miss.

The Story-Based Evaluation Process

MSC's strength lies in its systematic process of gathering and filtering stories to identify the most impactful changes.

- Story Collection: Participants are asked a simple, open-ended question like, "Looking back over the last three months, what do you think was the most significant change resulting from this training?"

- Hierarchical Filtering: Stories are shared and discussed within groups of stakeholders (e.g., participant teams, managers, senior leaders). Each group selects the story they believe represents the most significant change.

- Selection and Discussion: This selection process is repeated at higher levels of the organization. Crucially, the reasons for selecting each story are documented, revealing what the organization truly values.

- Feedback and Verification: The results and selected stories are shared back with participants and stakeholders to verify the findings and encourage further learning.

This process transforms evaluation from a top-down measurement exercise into a collaborative dialogue about progress and values. It builds engagement and provides a platform for participants to voice what truly matters to them.

Sample Evaluation Questions for MSC

The core of MSC is its open-ended questioning. Here are some sample evaluation questions to start the story-gathering process:

- "Since participating in the [Program Name], what is the most significant change you have experienced in your work?"

- "Can you share a specific story about a time you applied what you learned and the difference it made?"

- "Describe a change in your team's behavior or performance that you attribute to this initiative."

- (During the filtering process): "Why did you choose this particular story as the most significant?"

For L&D professionals, these questions are not just for collecting data; they are tools for fostering reflection and organizational learning. By analyzing the types of stories that are valued, you can gain a deeper understanding of your organization's culture and priorities. Learn more about applying qualitative methods by exploring the training evaluation resources from BetterEvaluation.

5. Outcome Harvesting Evaluation Questions

Outcome Harvesting is a participatory evaluation method that flips the traditional process on its head. Instead of measuring progress against predetermined objectives, it collects evidence of what has changed (the "outcomes") and then works backward to determine whether and how a training program contributed to these changes. This approach is particularly valuable for complex initiatives where outcomes are not easily predictable, such as leadership development or culture change programs.

This method is ideal for evaluating training programs that aim to influence complex behaviors or organizational systems. For example, a program designed to foster a more innovative and collaborative culture might not have simple, linear metrics. Outcome Harvesting allows L&D professionals to identify unexpected positive (or negative) changes in team dynamics, cross-departmental projects, or problem-solving approaches, and then trace those back to the training.

The Six Steps of Outcome Harvesting

The power of this method lies in its flexible, evidence-based approach that engages stakeholders directly in making sense of the results. The process is iterative and focuses on learning over simple measurement.

- Step 1: Design. The evaluation is designed with users to determine its scope and focus. Key questions are formulated to guide the data collection.

- Step 2: Collect Data & Formulate Outcomes. Information is gathered from diverse sources to draft outcome descriptions detailing what changed, for whom, and when.

- Step 3: Engage Stakeholders. The drafted outcomes are reviewed and enhanced with input from those knowledgeable about the program.

- Step 4: Substantiate. The credibility of the outcomes is verified by independent sources who are well-positioned to know if the changes occurred and the program's contribution.

- Step 5: Analyze & Interpret. The verified outcomes are analyzed to answer the core evaluation questions, identifying patterns and drawing conclusions.

- Step 6: Support Use. The findings are presented in a way that is useful for management and stakeholders to make informed decisions.

This collaborative process ensures that the evaluation is grounded in real-world changes and provides a rich narrative of the program's influence beyond what standard metrics can capture.

Sample Evaluation Questions for Outcome Harvesting

The questions in Outcome Harvesting are broad and exploratory, designed to uncover change rather than measure compliance.

- (To Participants): "Since you completed the leadership training, what have you done differently in your role? What changes in your team's behavior, if any, have you observed?"

- (To Managers): "What changes have you noticed in the behavior or performance of team members who participated in the new project management workshop?"

- (To Stakeholders): "Describe a significant change (positive or negative) in our organization's approach to customer service in the last six months. What or who do you believe influenced this change?"

- (For Substantiation): "We've been told that [specific outcome description] occurred. From your perspective, is this description accurate? What role, if any, do you think our training program played in this change?"

For L&D managers evaluating complex skills training, these sample evaluation questions help build a credible and nuanced story of impact. This approach moves beyond simple "pass/fail" metrics to understand the real-world influence of learning interventions. To learn more about this user-focused approach, explore resources from its primary developers at outcomeharvesting.net.

6. Developmental Evaluation Questions

Developmental Evaluation (DE) is a dynamic approach used to evaluate programs and initiatives in complex or uncertain environments. Popularized by Michael Quinn Patton, DE supports adaptive management by providing real-time feedback to guide ongoing development and innovation, rather than judging a finished product against predefined goals. It is designed for situations where the path forward is not clear and the outcomes are expected to emerge over time.

This method is particularly powerful for corporate initiatives like organizational transformation or the launch of a new, innovative training program where the objectives may evolve. For instance, if a company is rolling out a new leadership development program designed to foster a culture of innovation, DE helps the team learn and adapt the program as it unfolds, responding to what is and isn't working in real time. It's less about a final grade and more about continuous, informed improvement.

The Core Principles of Developmental Evaluation

DE’s strength lies in its adaptive and embedded nature. An evaluator becomes part of the project team, facilitating a continuous cycle of data collection, reflection, and adaptation.

- Real-Time Feedback: DE focuses on providing immediate, actionable feedback to inform decisions as they are being made, not after the fact. This supports agile program management.

- Learning and Adaptation: The primary goal is to help the team learn and adjust. Questions are framed to explore emerging patterns, challenges, and opportunities.

- Complexity and Emergence: It acknowledges that in complex systems, outcomes cannot be fully predicted. Evaluation helps navigate this uncertainty and make sense of what emerges.

- Innovation Support: It is ideal for innovative projects where the "what" and "how" are being discovered along the way, making it a perfect fit for piloting new training technologies.

This approach transforms evaluation from a final judgment into an integral part of the innovation and development process itself, embedding learning directly into the project's workflow.

Sample Evaluation Questions for Development

The following are sample evaluation questions designed to guide learning and adaptation:

- (Exploring the Situation): "What are we observing in the pilot group's response to the new interactive training module?"

- (Tracking Change): "How has our understanding of the 'problem' we are trying to solve changed since we started this initiative?"

- (Guiding Adaptation): "Based on this week's feedback, what is one adjustment we should make to the program's content or delivery for next week?"

- (Identifying Emergence): "What unintended consequences, both positive and negative, are arising from this organizational change?"

These questions act as catalysts for team discussion and strategic pivots. They help L&D teams stay responsive and ensure their programs remain relevant and effective in changing circumstances. To enhance the real-time feedback loop, teams can discover how to build more responsive learning experiences with interactive online training on mindstamp.com.

7. Utilization-Focused Evaluation Questions

Utilization-Focused Evaluation (U-FE) is an approach that prioritizes practical use over rigid methodology. Popularized by Michael Quinn Patton, this framework ensures that the evaluation process is designed from the outset to produce findings that are relevant, timely, and directly useful to intended stakeholders. The core principle is simple: evaluations should be judged by their utility and actual use.

This method is perfect for corporate training initiatives where the goal is immediate program improvement or strategic decision-making. Instead of just gathering data, U-FE forces learning and development (L&D) professionals to first ask, "Who will use this information, and for what specific purpose?" For example, when evaluating a new leadership development program, the focus isn't just on whether leaders learned new skills, but on providing the executive team with the exact data they need to decide whether to expand, modify, or discontinue the program.

Key Principles of Utilization-Focused Evaluation

The power of U-FE lies in its collaborative and action-oriented approach. It directly involves stakeholders in the process, ensuring the final report doesn't just sit on a shelf.

- Identify Primary Intended Users: Before crafting any questions, pinpoint the specific people (e.g., department heads, project managers) who will act on the evaluation's findings.

- Clarify Intended Uses: Work with these users to understand the decisions they need to make. The evaluation's purpose should be clearly linked to these decisions.

- Focus on Actionable Questions: Develop questions that yield actionable answers rather than just interesting data. The goal is to facilitate decision-making, not just to accumulate information.

- Plan for Utilization: The process includes planning how the findings will be shared and integrated into organizational workflows to ensure they lead to tangible change.

This approach ensures that every piece of data collected serves a direct purpose, making the evaluation process highly efficient and impactful. It shifts the focus from "What should we measure?" to "What do our decision-makers need to know to take effective action?"
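The principles above amount to a simple audit: every question should name who will use the answer and which decision it informs. The Python sketch below shows one possible way to run that check. The `EvaluationQuestion` class, the `unlinked_questions` helper, and the example entries are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class EvaluationQuestion:
    text: str
    intended_user: Optional[str] = None  # who will act on the answer
    decision: Optional[str] = None       # which decision the answer informs


def unlinked_questions(questions: List[EvaluationQuestion]) -> List[EvaluationQuestion]:
    """Return questions that lack an intended user or a decision to inform."""
    return [q for q in questions if not (q.intended_user and q.decision)]


# Hypothetical draft survey: one question tied to a decision, one that is not.
draft = [
    EvaluationQuestion(
        text="Which software skills from the training did you use in the past 30 days?",
        intended_user="department head",
        decision="which modules to fund next quarter",
    ),
    EvaluationQuestion(text="Did you enjoy the session?"),
]

flagged = unlinked_questions(draft)
for q in flagged:
    print(f"No intended use recorded: {q.text}")
```

Flagged questions are candidates for rewording or removal before the survey goes out, which keeps the evaluation focused on answers someone will actually act on.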

Sample Evaluation Questions for a U-FE Approach

Here are some sample evaluation questions you can adapt, framed with a focus on utility:

- (For Program Improvement): "Based on your experience, which two modules in this training were most effective and which two were least effective? What specific changes would make the least effective ones more relevant to your job?"

- (For Policy Decision Support): "To what extent has the new remote work training enabled your team to meet productivity targets? What data would convince leadership to invest further in this policy?"

- (For Resource Allocation): "Which of the new software skills learned in the training have you used most frequently in the past 30 days, and how much time have they saved you?"

- (For Future Planning): "What additional skills or knowledge, not covered in this program, do you need to effectively handle the upcoming project pipeline?"

For L&D managers, these sample evaluation questions are designed to bridge the gap between data collection and concrete action. By starting with the end user in mind, you make it far more likely that your evaluation efforts will provide genuine value and drive meaningful organizational improvement. To learn more about this and other practical evaluation frameworks, see the resources available from the American Evaluation Association.

Transforming Your Training From a Cost Center to a Value Driver

The journey from a simple training initiative to a measurable, value-driving organizational asset is paved with data. As we've explored, the right sample evaluation questions, drawn from proven models like Kirkpatrick's Four Levels or the CIPP framework, provide the raw material for this transformation. They are more than just a checklist; they are strategic tools for uncovering what works, what doesn’t, and why.

Moving beyond the "what" to the "how" is where true impact is made. Each framework, from the ROI-focused Logic Model to the narrative-driven Most Significant Change technique, offers a distinct lens. The key is to select the lens that aligns with your specific goals, whether it's quantifying skill acquisition, capturing behavioral shifts, or understanding the nuanced context of your learning environment.

From Post-Mortem to Real-Time Insight

Traditionally, evaluation has been a retrospective activity, a post-mortem conducted weeks after a program concludes. This approach often results in delayed insights and missed opportunities for immediate course correction. The real power lies in shifting evaluation from a final step to an integrated, continuous process.

By weaving your chosen evaluation questions directly into the learning experience using a platform like Mindstamp, you create a powerful feedback loop. This not only keeps learners engaged but also provides you with a constant stream of actionable data. You can identify comprehension gaps as they happen, not after the final quiz, and adjust your content accordingly.

Actionable Takeaways for Immediate Implementation

To start leveraging the power of evaluation, focus on these critical next steps:

- Align Your Model to Your Goal: Don't just pick a familiar framework. If your primary goal is to prove business impact, lean on Kirkpatrick or a Logic Model. If you need to adapt a new program in a rapidly changing environment, Developmental Evaluation is your ally.

- Integrate, Don't Isolate: Stop treating evaluation as a separate survey. Use tools like Mindstamp to embed your questions directly within your interactive video training modules. This puts each question in context and can increase response rates, because learners answer while the material is still in front of them.

- Start Small and Iterate: You don't need to implement a comprehensive, multi-level evaluation for every single training. Begin by adding simple comprehension checks (Level 2) and confidence-based questions to a single module. Use the data to make one small improvement, demonstrate the value, and expand from there.

Mastering the art of asking insightful sample evaluation questions is what separates a training department that consumes resources from one that demonstrably creates value. By turning feedback into a core component of the learning journey, you gain the clarity needed to optimize your programs, prove their worth to stakeholders, and ultimately, drive meaningful organizational performance. This strategic approach elevates your role, solidifying training and development as an indispensable engine for business growth.

Ready to turn your passive training videos into active, data-gathering powerhouses? Mindstamp makes it simple to embed any of the evaluation questions discussed in this article directly into your video content. Start your free trial today and see firsthand how interactive video can transform your training evaluation process.
