7 Essential Training Evaluation Methods for 2025

In today's competitive landscape, proving the value of learning and development (L&D) is no longer a 'nice-to-have' but a business imperative. While many organizations still rely on simple satisfaction surveys, often called 'happy sheets', a truly effective L&D strategy requires a much deeper level of analysis. The right training evaluation methods do not just tell you if employees enjoyed a course; they reveal what was learned, how behaviors changed on the job, and most importantly, the tangible impact on business results.

Moving beyond surface-level feedback to a structured evaluation framework is the key to optimizing training budgets, aligning learning with strategic goals, and demonstrating a clear return on investment (ROI). For L&D managers, sales leaders, and marketing professionals alike, understanding which models to use and when is crucial for success. Choosing the correct framework ensures your programs are not just well-received but are actively driving performance and contributing to the bottom line.

This guide provides a comprehensive roundup of the seven most influential training evaluation methods used by leading organizations. We will dissect each model, exploring its core principles, practical applications, and the specific pros and cons to consider. For each method, from the foundational Kirkpatrick Model to the business-focused Phillips ROI framework and the narrative-driven Success Case Method, you will find actionable tips to help you select and implement the best approach for your specific needs. The goal is to equip you with the knowledge to move past simple metrics and start measuring the real, lasting impact of your training initiatives.

1. Kirkpatrick's Four-Level Model

The Kirkpatrick Model is arguably the most recognized and widely used framework among training evaluation methods. Developed by Donald Kirkpatrick in the 1950s, it provides a four-level strategy for assessing the effectiveness of training programs in a structured, sequential way. The core idea is that each level builds on the one before it, creating a chain of evidence from initial learner satisfaction to tangible business results.

This model's enduring popularity stems from its logical, cause-and-effect structure. It moves beyond simply asking if participants enjoyed the training and pushes L&D professionals to measure true behavioral change and its impact on the organization's bottom line.

How the Four Levels Work

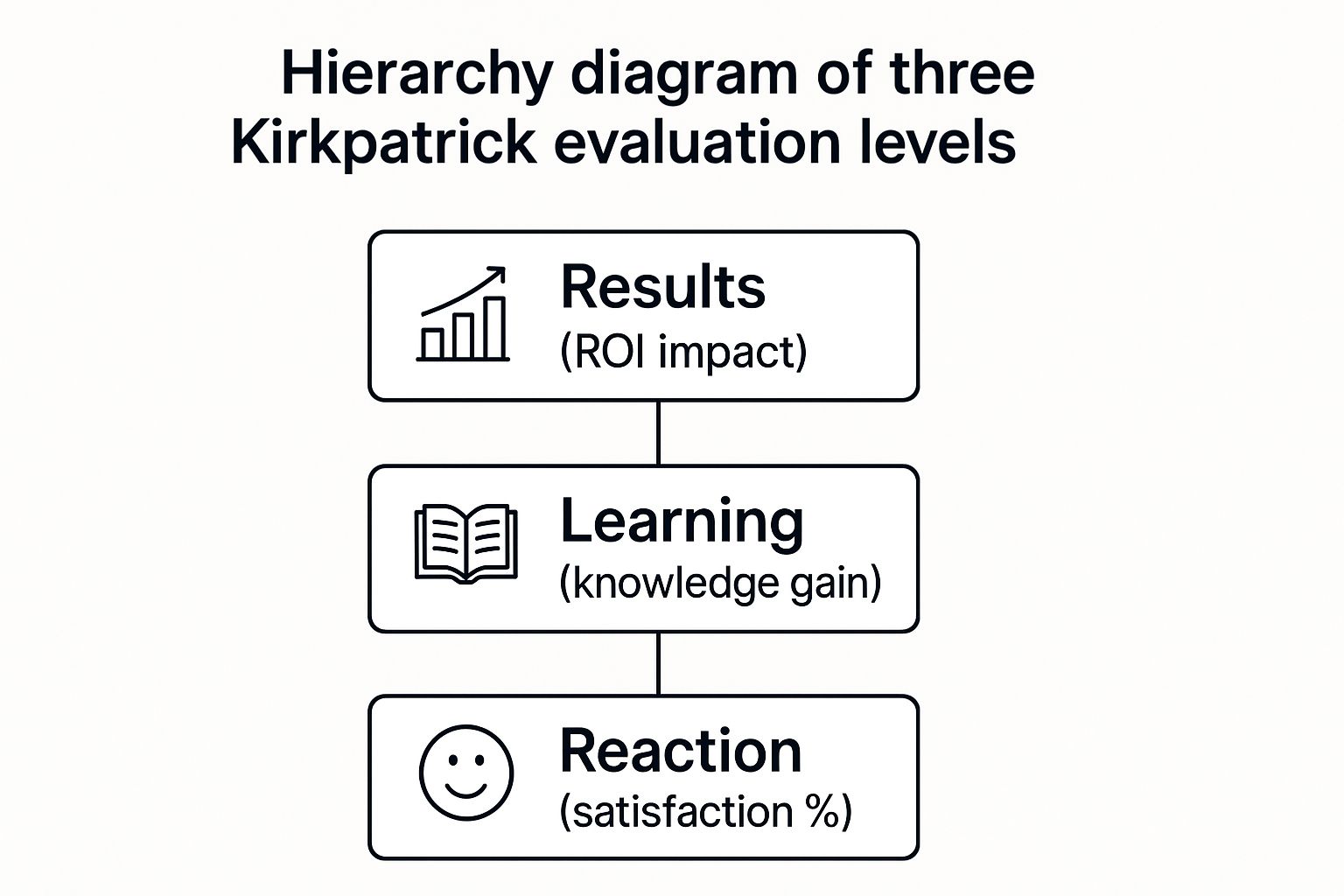

The model’s hierarchy provides a clear path for evaluation. Each level answers a more critical question than the last, creating a comprehensive picture of training effectiveness.

- Level 1: Reaction. This level gauges how participants felt about the training. Did they find it engaging, relevant, and well-presented? Measurement is often done through post-training surveys or "smile sheets."

- Level 2: Learning. Here, the focus shifts to quantifying what participants learned. This involves measuring the increase in knowledge, skills, and confidence. Assessments can include pre- and post-training tests, practical demonstrations, or role-playing exercises. For instance, when evaluating a language course, using practical tools like online language proficiency tests can provide concrete data on skill improvement.

- Level 3: Behavior. This crucial level assesses whether participants are applying what they learned back on the job. It measures the transfer of knowledge into workplace performance. Data is typically collected through manager observations, self-assessments, peer reviews, or performance metrics, often weeks or months after the training.

- Level 4: Results. The final level connects the training to business outcomes. Did the training program impact key performance indicators (KPIs) like productivity, sales, quality, employee retention, or cost reduction? This is the most challenging level but provides the strongest evidence of a program's value.
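The pre- and post-training tests described under Level 2 lend themselves to a simple calculation. The Python sketch below is illustrative only: the function name, the sample scores, and the use of a normalized gain (the share of available headroom a group closed) are assumptions added here, not part of the Kirkpatrick Model itself.

```python
from statistics import mean


def learning_gain(pre_scores, post_scores, max_score=100):
    """Summarize Level 2 learning from paired pre/post test scores."""
    if len(pre_scores) != len(post_scores) or not pre_scores:
        raise ValueError("need equal-length, non-empty score lists")

    pre_avg = mean(pre_scores)
    post_avg = mean(post_scores)
    raw_gain = post_avg - pre_avg

    # Normalized gain: how much of the room for improvement was closed.
    headroom = max_score - pre_avg
    normalized_gain = raw_gain / headroom if headroom > 0 else 0.0

    return {
        "pre_avg": pre_avg,
        "post_avg": post_avg,
        "raw_gain": raw_gain,
        "normalized_gain": normalized_gain,
    }


# Hypothetical percent-correct scores for four learners.
summary = learning_gain([50, 60, 40, 50], [80, 85, 70, 85])
print(summary)
```

Reporting the normalized figure alongside the raw gain helps when cohorts start from different baselines, since a group that already scored high has less room to improve.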

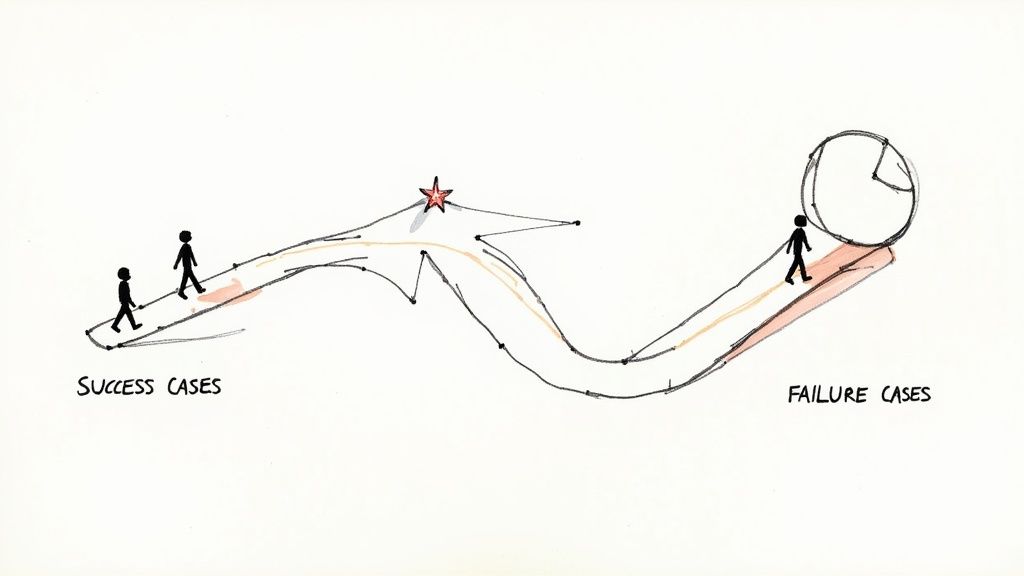

This infographic visualizes the hierarchy of the Kirkpatrick Model: participant satisfaction forms the base, while true success is measured by the learning and business results that build upon it.

When to Use This Model

The Kirkpatrick Model is ideal for organizations that need a comprehensive, multi-layered view of training impact. It's particularly effective for evaluating high-stakes or resource-intensive programs where demonstrating a clear link to business results is essential for justification and future investment. Companies like IBM and McDonald's use it to validate their extensive leadership and technical training initiatives. Learn more about how to measure training effectiveness with this model on mindstamp.com.

2. Phillips ROI Model (Five-Level Framework)

The Phillips ROI Model, developed by Dr. Jack Phillips, builds directly upon the Kirkpatrick framework by adding a critical fifth level: Return on Investment (ROI). This model addresses a common challenge for L&D professionals, which is translating training outcomes into concrete financial terms. It provides a systematic process not just for measuring business impact, but for isolating the specific effects of the training from other influencing factors.

This extension makes it one of the most robust training evaluation methods for organizations that need to justify training budgets and demonstrate clear financial value to senior leadership. It moves the conversation from "Did our people learn?" to "What was the financial return of our investment in learning?"

How the Five Levels Work

The model adopts Kirkpatrick’s first four levels and adds a final layer of financial analysis. This creates a complete story from initial learner reaction to tangible, monetized results.

- Level 1: Reaction and Planned Action. This mirrors Kirkpatrick's first level, gauging participant satisfaction and perceived value, and adds one element: it asks participants how they intend to apply the learning.

- Level 2: Learning. This level measures the degree to which participants acquired the intended knowledge, skills, and attitudes from the training program, often through tests or skill demonstrations.

- Level 3: Application and Implementation. Similar to Kirkpatrick's Behavior level, this assesses whether participants are applying their new skills on the job. It measures the transfer of learning into workplace performance.

- Level 4: Business Impact. Here, the model focuses on the consequences of the applied learning on key business metrics. It involves collecting data on improvements in areas like productivity, quality, sales, and efficiency. A key step is isolating the effects of training from other factors (e.g., a new marketing campaign or economic changes) to pinpoint the program's true impact.

- Level 5: Return on Investment (ROI). This is the ultimate level of evaluation. The monetary benefits from the program (Level 4 data converted to money) are compared to the total costs of the program. The result is expressed as an ROI percentage (net benefits divided by costs, multiplied by 100) or as a benefit-cost ratio (total benefits divided by costs), such as the famous case where Motorola demonstrated a $30 return for every $1 spent on training.

The framework also encourages accounting for intangible benefits, like improved teamwork or higher job satisfaction, which are noted even if not converted to monetary value.
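To make the Level 4 and Level 5 arithmetic concrete, here is a minimal Python sketch. The function name, the single `attribution` fraction used to isolate training's share of the improvement, and all dollar figures are hypothetical; in practice the isolation step may rely on control groups, trend analysis, or participant estimates rather than one number.

```python
def phillips_roi(gross_benefit, attribution, total_cost):
    """Return isolated benefit, benefit-cost ratio, and ROI percentage.

    gross_benefit: monetary value of the improvement in the business metric
    attribution:   assumed fraction (0 to 1) of that improvement credited to training
    total_cost:    fully loaded cost of the program
    """
    if not 0 <= attribution <= 1:
        raise ValueError("attribution must be between 0 and 1")
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")

    # Level 4: isolate the effect of training from other factors.
    isolated_benefit = gross_benefit * attribution

    # Level 5: compare monetary benefits with program costs.
    net_benefit = isolated_benefit - total_cost
    benefit_cost_ratio = isolated_benefit / total_cost
    roi_percent = net_benefit / total_cost * 100

    return {
        "isolated_benefit": isolated_benefit,
        "benefit_cost_ratio": benefit_cost_ratio,
        "roi_percent": roi_percent,
    }


# Hypothetical program: $300,000 improvement, half credited to training, $60,000 cost.
result = phillips_roi(gross_benefit=300_000, attribution=0.5, total_cost=60_000)
print(result)
```

Note that the two outputs are not interchangeable: in this example a benefit-cost ratio of 2.5 corresponds to an ROI of 150%, because ROI is calculated on net benefits rather than total benefits.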

When to Use This Model

The Phillips ROI Model is best suited for high-cost, high-visibility training programs where stakeholders demand a rigorous financial justification. It's ideal for strategic initiatives, extensive leadership development programs, or large-scale technical training where a significant investment needs to be validated. Companies like Wells Fargo and Microsoft apply this framework to evaluate major programs and secure ongoing C-suite support.

While more resource-intensive than other methods, its ability to calculate a clear ROI makes it invaluable for proving the L&D function’s direct contribution to the organization's financial success. You can explore the methodology further at the ROI Institute, co-founded by Jack and Patti Phillips.

3. CIPP Model (Context, Input, Process, Product)

Developed by Daniel Stufflebeam, the CIPP Model is a comprehensive, decision-oriented evaluation framework. It moves beyond a simple post-mortem analysis and instead focuses on providing timely information for proactive program improvement. The acronym CIPP stands for the four interconnected components it assesses: Context, Input, Process, and Product.

Unlike models that primarily measure end results, CIPP is designed for both formative (improving the program as it runs) and summative (judging its final worth) evaluation. This makes it a powerful tool for ensuring a training initiative not only meets its goals but also adapts and evolves based on real-time feedback and changing needs.

How the Four Components Work

The model’s strength lies in its systematic approach, where each component answers a specific set of questions to guide decision-making throughout the training lifecycle. This structure provides a holistic view, from initial planning to final impact.

- Context Evaluation (What needs to be done?): This stage focuses on identifying the target audience's needs, the problems the training aims to solve, and the organizational goals it supports. It involves a needs assessment to define clear, relevant objectives. For example, a company might use context evaluation to determine that a sales decline is due to a lack of knowledge about a new product line, thereby setting the goal for a product training program.

- Input Evaluation (How should it be done?): Here, the evaluation assesses the resources, strategies, and plans for the training. This includes reviewing the budget, instructional design, materials, and delivery methods. The goal is to choose the most effective and efficient approach. A key question would be, "Is a blended learning approach with e-modules and workshops the best strategy given our budget and employees' schedules?"

- Process Evaluation (Is it being done as planned?): This is a formative check on the program's implementation. It monitors the training as it happens to identify and fix any issues. Data is collected through participant feedback, instructor observations, and activity logs to ensure the program is being delivered with fidelity and quality. For instance, mid-course surveys might reveal that a specific module is confusing, allowing for immediate adjustments.

- Product Evaluation (Did it succeed?): The final stage assesses the outcomes and impact of the training program, both intended and unintended. It measures whether the initial objectives were met by analyzing results like improved performance, enhanced skills, and overall organizational impact. This aligns with measuring ROI and justifying the program’s value, similar to the final levels of other evaluation models.
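The mid-course survey check described under Process Evaluation can be sketched in a few lines of Python. Everything here is an assumption for illustration: the 1-to-5 clarity rating, the threshold of 3.0, the module names, and the function name are not prescribed by the CIPP Model.

```python
from collections import defaultdict
from statistics import mean


def flag_confusing_modules(responses, threshold=3.0):
    """Return modules whose average clarity rating falls below the threshold.

    responses: iterable of (module_name, rating) pairs from a mid-course survey
    """
    ratings = defaultdict(list)
    for module, rating in responses:
        ratings[module].append(rating)

    averages = {module: mean(values) for module, values in ratings.items()}
    return sorted(module for module, avg in averages.items() if avg < threshold)


# Hypothetical survey responses on a 1 (confusing) to 5 (clear) scale.
responses = [
    ("Module 1", 4),
    ("Module 1", 5),
    ("Module 2", 2),
    ("Module 2", 3),
    ("Module 3", 4),
]
flagged = flag_confusing_modules(responses)
print(flagged)
```

Running a check like this while the program is still in progress is what makes it formative: the flagged module can be revised before the next cohort reaches it.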

When to Use This Model

The CIPP Model is particularly effective for large-scale, complex, or long-term training initiatives where continuous improvement is critical. It is ideal for organizations that want to build a culture of learning and adaptation rather than just passing a final judgment on a program. Organizations like the Peace Corps have used the CIPP framework to evaluate and refine their extensive volunteer training programs, ensuring they remain relevant and effective in diverse global contexts. Similarly, universities often apply it to assess faculty development programs for ongoing enhancement. You can explore a deeper dive into its application at the Western Michigan University's Evaluation Center.

4. Kaufman's Five Levels of Evaluation

Roger Kaufman's Five Levels of Evaluation model expands upon traditional frameworks by introducing a crucial external focus. While it shares foundational elements with models like Kirkpatrick's, it adds a societal and client-oriented dimension, urging organizations to look beyond internal results and consider their broader impact. This model reframes training evaluation not just as an internal audit but as a measure of an organization's contribution to its clients and society.

Kaufman, a key figure in performance improvement, distinguishes between micro-level (individual) and macro-level (organizational) results, ultimately pushing towards a mega-level (societal) perspective. His approach challenges L&D professionals to align training with outcomes that benefit external stakeholders, positioning training as a strategic tool for corporate social responsibility and sustainable success.

How the Five Levels Work

Kaufman’s model reorganizes and extends the evaluation hierarchy. It begins with inputs and processes before moving through the familiar levels of learning and performance to its unique focus on external impact.

- Level 1: Inputs & Process. This level evaluates the resources, materials, and processes used for the training (the "Enabling" factors). It asks: Were the inputs high-quality and the methods efficient? This is analogous to a process audit, checking the quality of ingredients before judging the final dish.

- Level 2: Reaction. Similar to Kirkpatrick's first level, this gauges participant satisfaction and engagement with the training program. It measures how learners felt about the experience.

- Level 3: Learning. This level measures the acquisition of knowledge and skills. Did participants gain the intended competencies? This is typically assessed through tests, simulations, or skill demonstrations.

- Level 4: Performance. Here, the evaluation focuses on the application of learned skills on the job and the resulting micro-level contributions to the organization. Are employees performing better, and is this impacting team or department goals?

- Level 5: Results. The final level measures the mega-level impact on external clients and society. Did the training lead to improved customer satisfaction, community well-being, or a positive environmental footprint? This is the model’s defining feature, connecting organizational efforts to societal value. For example, a public health training program would use this level to measure a reduction in community infection rates.

When to Use This Model

Kaufman’s model is one of the most comprehensive training evaluation methods and is best suited for organizations committed to social responsibility or those whose success is directly tied to client and community outcomes. It is ideal for non-profits, public sector agencies, and corporations with strong CSR initiatives. For instance, UNESCO has utilized its principles to evaluate global education initiatives. It's the right choice when you need to justify training not just by its internal ROI, but by its tangible, positive impact on the world outside the company walls. You can explore more on its application through the International Society for Performance Improvement.

5. Anderson's Model of Learning Evaluation

Anderson's Model of Learning Evaluation, developed by Valerie Anderson, offers a value-focused framework for assessing training programs, particularly in the realm of e-learning and technology-driven education. It shifts the emphasis from a purely post-program assessment to a more holistic view that aligns training investments with strategic business objectives from the outset.

Unlike models that primarily measure after-the-fact results, Anderson's approach integrates evaluation into the entire training lifecycle. It begins by defining the business needs and expected value, then uses this baseline to measure the program's alignment and ultimate contribution. This makes it one of the most strategic training evaluation methods for organizations focused on optimizing their learning technology investments.

How Anderson's Model Works

This model is built around a comprehensive cycle that connects learning strategy to business value, ensuring that training is not just an activity but a targeted business solution. It typically follows a multi-stage alignment and measurement process.

- Determine Strategic Alignment: The first step involves identifying the key business goals the training is meant to support. This stage answers the question: "Why are we doing this training, and what specific business outcome do we expect?"

- Identify the Best Solution: Before development, the model requires an analysis to determine if training is the right solution and, if so, which modality (e.g., e-learning, blended, instructor-led) will be most effective and efficient.

- Calculate Business Value: This stage involves forecasting the potential value and ROI of the training program. It sets clear financial and performance expectations that will be used for final evaluation. This is where the model is particularly strong.

- Measure Results & Impact: After the training is delivered, data is collected to measure its impact against the pre-defined business metrics. This includes not just learning and behavior but also the financial value generated, connecting directly back to the initial calculations.

This approach ensures that evaluation is not an afterthought but a continuous process woven into the fabric of the learning initiative. For example, Cisco uses this framework to evaluate its extensive online certification programs, ensuring that the skills taught directly align with market demands and deliver tangible value to both the learner and the company.

When to Use This Model

Anderson's Model is exceptionally well-suited for organizations making significant investments in e-learning platforms, learning management systems (LMS), and other educational technologies. It is ideal when stakeholders demand a clear, data-driven business case for training expenditures.

Its focus on forecasting and measuring financial impact makes it perfect for high-stakes projects where justifying ROI is a primary concern. It also provides a robust framework for creating highly effective and targeted learning experiences. By evaluating design and process, the model can inform the creation of adaptive and responsive training, similar to the concepts behind personalized learning pathways. You can learn more about building these tailored educational experiences on mindstamp.com.

6. Brinkerhoff's Success Case Method

Developed by Robert Brinkerhoff, the Success Case Method (SCM) offers a practical and focused alternative to traditional training evaluation methods. Instead of measuring average performance across all participants, SCM deliberately seeks out the extremes. It identifies and rigorously studies the most and least successful cases to understand what factors drive success and what barriers lead to failure.

This method’s power lies in its storytelling and diagnostic capabilities. By analyzing what worked for the high performers and what went wrong for others, organizations gain rich, qualitative insights. These compelling narratives and concrete examples provide powerful evidence of training impact and clear, actionable recommendations for program improvement.

How the Success Case Method Works

SCM follows a targeted process to uncover the stories behind the numbers. It focuses on identifying best practices and critical failure points to create a holistic view of a program’s real-world application.

- Identify the Extremes. The first step is to survey a group of training participants to identify the outliers. The survey aims to separate those who have been highly successful in applying their new skills from those who have struggled or failed to do so.

- Conduct In-Depth Interviews. Once the most and least successful individuals are identified, the evaluator conducts detailed interviews. For success cases, the goal is to understand precisely what they did, how they overcame challenges, and what organizational factors supported them. For non-successful cases, the interviews explore the obstacles they faced.

- Analyze and Document Findings. The information gathered is analyzed to pinpoint the key enablers and barriers to training transfer. The findings are then documented as compelling success stories and cautionary tales, highlighting specific behaviors and environmental factors.

- Communicate and Recommend. The final step involves sharing these stories with key stakeholders. The narrative format makes the results memorable and persuasive, leading to clear, data-driven recommendations for enhancing the training program and the supportive work environment.
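The first step, identifying the extremes, is the most mechanical part of the method and can be sketched in Python. The survey scale, participant IDs, and function name below are hypothetical; the sketch simply ranks respondents by a self-reported application score and returns the candidates to interview.

```python
def pick_extremes(survey, n=2):
    """Split survey respondents into the n most and n least successful cases.

    survey: dict mapping participant ID to a self-reported application score
    """
    if len(survey) < 2 * n:
        raise ValueError("not enough respondents to form two distinct groups")

    # Highest scores first; ties keep their original survey order.
    ranked = sorted(survey.items(), key=lambda item: item[1], reverse=True)

    success_cases = [participant for participant, _ in ranked[:n]]
    non_success_cases = [participant for participant, _ in ranked[-n:]]
    return success_cases, non_success_cases


# Hypothetical scores on a 1 (no application) to 10 (extensive application) scale.
survey = {"P01": 9, "P02": 3, "P03": 7, "P04": 1, "P05": 5, "P06": 8}
success, non_success = pick_extremes(survey)
print(success, non_success)
```

The output is only a shortlist. The evidence comes from the interviews that follow, which confirm or correct what respondents reported about themselves.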

When to Use This Model

The Success Case Method is an excellent choice when you need to quickly understand why a training program is or isn't working. It's particularly useful when resources for a full-scale quantitative analysis are limited or when you need to provide stakeholders with persuasive, qualitative evidence of impact. Companies like IBM have used SCM to evaluate leadership development initiatives, while The World Bank applies it to assess capacity-building programs. You can discover more about building effective evaluation into your programs by exploring various learning assessment strategies on mindstamp.com.

7. Kraiger's Three-Dimensional Framework

The Kraiger, Ford, & Salas (KFS) Model offers a more academic and granular approach to training evaluation by focusing on the specific types of learning outcomes. Developed by Kurt Kraiger and his colleagues in 1993, this framework categorizes learning into three distinct dimensions: cognitive, skill-based, and affective. It moves beyond a simple "did they learn it?" question to ask, "what kind of learning occurred?"

This model's strength lies in its precision. By breaking down learning into these three categories, it allows Learning and Development (L&D) professionals to create highly targeted assessments that align directly with the training's specific goals. It provides a robust, research-backed foundation for measuring nuanced changes in an employee's knowledge, abilities, and attitudes.

How the Three Dimensions Work

The framework proposes that effective training evaluation requires measuring outcomes across one or more of these interconnected dimensions. This provides a holistic view of the learning that has taken place, ensuring that critical outcomes aren't overlooked.

- Cognitive Outcomes. This dimension focuses on what participants know. It measures the acquisition of knowledge, from basic recall of facts to the development of complex mental models and strategic thinking. Assessments might include multiple-choice tests, essays, or problem-solving simulations that reveal how an employee organizes and applies information.

- Skill-Based Outcomes. This dimension evaluates what participants can do. It measures the development of technical or motor skills and the proficiency with which tasks are performed. Evaluation methods here are practical and performance-oriented, such as hands-on demonstrations, role-playing scenarios, or work sample assessments where an employee performs a job-related task.

- Affective Outcomes. This dimension assesses how participants feel. It focuses on changes in attitudes, beliefs, motivation, and self-efficacy (an individual's belief in their ability to succeed). This is often measured through attitudinal surveys, self-reported confidence scales, or observational data on how an employee approaches their work and interacts with colleagues post-training.
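One practical use of the three dimensions is checking that an evaluation plan actually covers each of them. The Python sketch below is a hypothetical illustration: the dimension labels, the tuple layout, and the sample scores are assumptions, and scores are only compared within a dimension because each may use a different scale.

```python
from statistics import mean

DIMENSIONS = ("cognitive", "skill_based", "affective")


def dimension_profile(assessments):
    """Average pre-to-post change per dimension; None marks a dimension with no measure.

    assessments: list of (dimension, pre_score, post_score) tuples
    """
    profile = {}
    for dimension in DIMENSIONS:
        changes = [post - pre for dim, pre, post in assessments if dim == dimension]
        profile[dimension] = mean(changes) if changes else None
    return profile


# Hypothetical plan with two knowledge tests and one work-sample rating,
# but no attitudinal or self-efficacy measure.
assessments = [
    ("cognitive", 60, 80),
    ("cognitive", 50, 70),
    ("skill_based", 2, 4),
]
profile = dimension_profile(assessments)
gaps = [dimension for dimension, change in profile.items() if change is None]
print(profile, gaps)
```

Here the `affective` entry comes back empty, which signals that the plan would miss changes in confidence or motivation unless a survey or confidence scale is added.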

When to Use This Model

Kraiger's Three-Dimensional Framework is particularly valuable for organizations that need to design highly specific and valid assessments tied directly to learning objectives. It is ideal for complex training initiatives where success depends on a mix of knowledge, practical skills, and mindset shifts. For example, military training programs use it to ensure soldiers not only know procedures (cognitive) and can execute them (skill-based), but also have the confidence and mindset to perform under pressure (affective). Similarly, it is a powerful tool in medical education and high-stakes corporate technical training, where all three learning dimensions are critical for on-the-job competence. Find out more about its application in the original research published in the Journal of Applied Psychology.

Training Evaluation Methods Comparison Matrix

From Data to Decisions: Choosing and Implementing Your Evaluation Strategy

Navigating the landscape of training evaluation methods can feel like choosing from a complex menu. We've journeyed through the foundational levels of Kirkpatrick, the financial rigor of Phillips' ROI, the holistic perspective of the CIPP Model, and the outcome-focused approaches of Kaufman and Brinkerhoff. Each framework, from Anderson's value-centric model to Kraiger's cognitive, skill-based, and affective dimensions, offers a unique lens through which to measure the impact and effectiveness of your learning initiatives.

The most critical takeaway is that there is no single, universally superior model. The quest is not to find the "best" method, but to identify the most appropriate method for your specific context. The ideal choice hinges on a careful consideration of your organizational goals, the scope of the training program, the expectations of your stakeholders, and the resources you have at your disposal. A one-hour compliance webinar simply does not require the same level of analytical depth as a year-long, enterprise-wide digital transformation program.

Moving from Theory to Actionable Insight

The true power of these models is unlocked when you move beyond academic understanding and into strategic implementation. Your evaluation process should not be an afterthought tacked on at the end of a program. Instead, it must be woven into the very fabric of your instructional design from the earliest stages.

Begin with the end in mind. Before you even develop content, ask the crucial questions:

- What specific business problem are we trying to solve?

- What behaviors need to change on the job to solve it?

- What knowledge or skills must learners acquire to enable that behavior change?

- How will we know, with tangible data, that this change has occurred?

Answering these questions first allows you to select a framework, or even a hybrid of several, that will provide the precise data you need. For instance, you might use Kirkpatrick's Level 1 and 2 for immediate feedback and learning checks, while simultaneously employing Brinkerhoff's Success Case Method to gather powerful qualitative stories that illustrate the program's peak impact for executive stakeholders.

Integrating Strategy with Modern Technology

Choosing the right framework is only half the battle. The other half is efficiently and effectively collecting the data required to fuel it. This is where modern technology becomes an indispensable partner, transforming evaluation from a burdensome, manual task into a seamless, automated process.

Imagine trying to measure Kirkpatrick's Level 2 (Learning) across a global sales team. Traditional post-training surveys are often met with low response rates and recall bias. However, by embedding interactive questions, polls, and knowledge checks directly within your video training modules, you can capture comprehension data in real time, at the precise moment of learning. This not only provides more accurate data but also enhances learner engagement, turning passive viewing into an active learning experience.

Ultimately, mastering these training evaluation methods is about shifting your function's narrative. It’s about moving from being perceived as a cost center to being celebrated as a strategic business partner that drives measurable results. By strategically selecting your evaluation framework and leveraging technology to streamline data collection, you build a powerful case for the undeniable value of learning and development. You create a virtuous cycle of continuous improvement, where data-driven insights inform better training, which in turn delivers stronger business outcomes and proves the profound impact of your work.

Ready to automate your learning evaluation and capture real-time comprehension data? Mindstamp makes it easy to add interactive quizzes, questions, and polls directly into your training videos, seamlessly integrating Kirkpatrick Level 2 evaluation into your content. Transform your training from a passive lecture into an engaging, data-rich experience by visiting Mindstamp to start your free trial today.
