Evaluate Training Effectiveness Beyond the Basics

To really know if your training is working, you have to go way beyond the simple "Did you enjoy the session?" surveys. The real goal is to measure how that learning actually translates into tangible business results. It’s a process of connecting the dots—from what people thought of the training, to what they learned, how their behavior changed, and finally, how it impacted the company's bottom line. This is how you shift training from being seen as an expense to a proven driver of growth.

Why Evaluating Training Effectiveness Matters More Than Ever

In today's competitive world, every dollar spent needs to be justified. When Learning & Development (L&D) operates without clear metrics, it's easy for it to get labeled as a "feel-good" cost center instead of the strategic partner it should be. A systematic approach to evaluation is the only way to demonstrate its true value and lock in its spot as a critical business function.

Think about the risks of not evaluating your training properly. You could be pouring money into programs that don't fix real skills gaps, leading to wasted budgets and, even worse, disengaged employees who feel their development isn't a priority. Over time, that gap between your team's skills and what the business needs just gets wider and wider.

Let’s be honest: evaluating training can feel like a lot of work. But the payoff is huge, providing clarity and direction that benefits the entire organization.

Before we dive into the "how," it's helpful to see the "why" laid out clearly. Systematically measuring your training impact does more than just prove value; it creates a positive feedback loop for continuous improvement.

Core Benefits of Effective Training Evaluation

This table isn't just a summary; it's a roadmap. Each of these benefits represents a powerful argument you can make to leadership, backed by the data you'll learn how to collect.

Connecting Training to Business Outcomes

The whole point of evaluation is to draw a direct line from a training session to a measurable business outcome. It’s one thing to say a training was "well-received." It's another thing entirely when you can prove a new sales training program directly contributed to a 15% increase in quarterly revenue. That gets attention.

Imagine linking a new compliance course to a 40% reduction in safety incidents. That’s not a soft metric; it’s undeniable proof of value.

This data-driven approach completely changes the conversation from "How much does this cost?" to "What's the return on this investment?" The numbers tell a powerful story. Research shows that organizations with strong, formalized training programs see 218% higher income per employee and a 24% higher profit margin than companies without them. You can read the full research on training's financial impact to dig into the details.

Key Takeaway: Evaluating training isn't just about defending the L&D budget. It’s about giving the C-suite hard evidence that learning initiatives are directly fueling profitability, productivity, and your competitive edge.

Building a Roadmap for Improvement

Beyond just proving your worth, solid evaluation gives you a clear roadmap for getting better. The insights you gather are gold, helping you answer the really important questions that shape your L&D strategy:

- Engagement: Which formats are actually working? Is it the interactive video from a tool like Mindstamp, or are in-person workshops still king for certain topics?

- Content: Are the training materials relevant and easy to understand? Do they actually apply to what people do every day?

- Application: Here's the big one. Are employees actually using their new skills on the job weeks or even months later?

Answering these questions allows you to tweak, iterate, and constantly optimize your programs. You ensure that every new initiative you launch is more effective and impactful than the last. This guide will walk you through the models, metrics, and tools you need to build a powerful evaluation framework—one that proves value and drives real, lasting change.

Choosing Your Evaluation Framework

To really get a grip on whether your training is working, you need more than just a random collection of metrics. You need a game plan, a structured framework that guides you from those initial gut reactions all the way to long-term business impact. Without a model, you're just collecting disconnected data points that don't tell a coherent story.

Think of the right framework as your blueprint. It makes sure you're measuring what actually matters at each stage of the learning process, helping you build a rock-solid case for your training programs.

Let's dive into some of the most trusted and practical models people in the field use every day.

The Foundational Kirkpatrick Model

If you've been in L&D for more than a minute, you've heard of the Kirkpatrick Model. It’s pretty much the gold standard for training evaluation, and for good reason. It breaks down the entire process into four clear, progressive levels, giving you a complete picture of your program's ripple effect. It's how you move past simple "happy sheets" to see if training actually changed what people know, how they behave, and ultimately, the company's bottom line.

Here’s how each level plays out in the real world:

Level 1: Reaction

This is all about how participants felt about the training. Was it engaging? Relevant? A good use of their time? While some dismiss this as fluff, this feedback is your first and fastest indicator for improving the learning experience itself.

- Scenario: You’ve just wrapped up a virtual workshop on new project management software. You immediately send out a quick survey asking folks to rate the instructor's clarity, the relevance of the content to their daily work, and how easy the platform was to use. This tells you instantly if the session was a home run or a total snooze-fest.

Level 2: Learning

This is where you measure the "aha!" moments. Did people actually pick up new knowledge, skills, or even change their attitudes? This is where you prove that learning actually happened.

- Scenario: Before rolling out a new cybersecurity awareness training, you have employees take a pre-training quiz to see where they stand. After they finish the interactive modules, they take a post-training assessment. Seeing a huge jump in scores—say, from an average of 45% to 92%—is concrete proof of knowledge gain.

Level 3: Behavior

This is the make-or-break level. It’s the leap from theory to practice. Are employees taking what they learned and actually using it on the job? This is where your training starts to deliver real, tangible value.

- Scenario: A sales team just finished training on a new consultative selling method. A month later, you and their managers use a shared observation checklist during calls to see how often they're using the new open-ended questions and active listening skills. You'd also check the CRM to see if there's an uptick in logged follow-up activities.

Level 4: Results

This is the big one. The final level connects your training directly to business outcomes. It answers that ultimate question from leadership: "So what? What was the business impact?"

- Scenario: Six months after a leadership development program for mid-level managers, the HR team digs into departmental data. They discover that teams led by the newly trained managers show a 15% bump in employee engagement scores and a 10% drop in voluntary turnover compared to a control group. That's a result you can take to the bank.

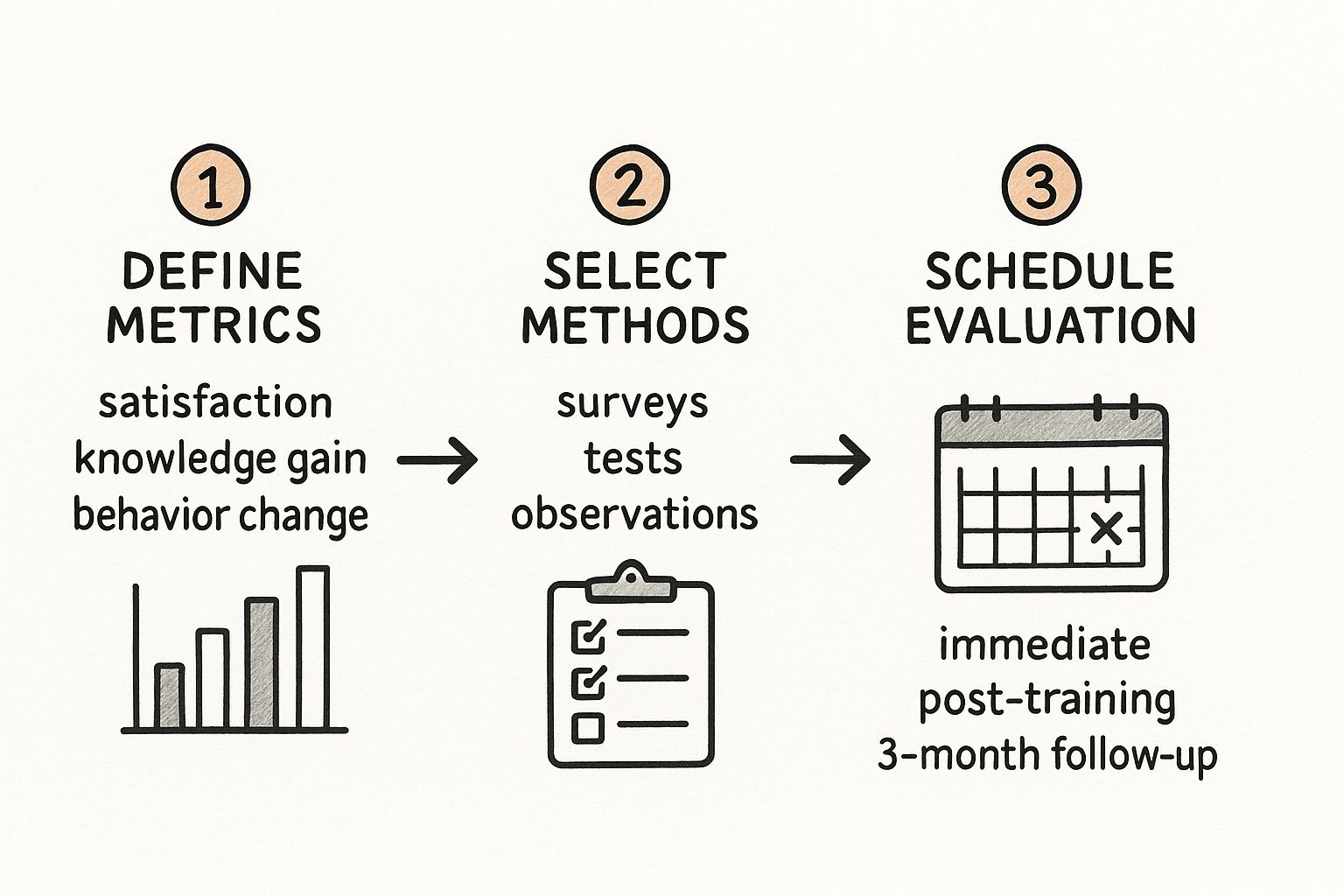

This simple infographic breaks down how you can plan your own evaluation, from defining what you'll measure to scheduling the follow-up.

Mapping out your metrics, methods, and timing is a straightforward but incredibly powerful way to make sure your evaluation is both strategic and practical.

Adding a Financial Lens with the Phillips ROI Model

When you need to speak the language of the C-suite, the Phillips ROI Model is your best friend. It doesn't reinvent the wheel; it just builds on Kirkpatrick's work by adding a fifth, and absolutely critical, level.

Level 5: Return on Investment (ROI)

This level takes those Level 4 results and puts a dollar sign on them. It directly compares the monetary value of the outcomes to the total cost of the training program. The final number is a clear, financially-driven metric of success.

To calculate ROI, you isolate the monetary benefits from the training (like more sales or lower operational costs), subtract the program costs, and then divide that number by the program costs. An ROI of 150% means for every $1 you invested, the company got its dollar back plus an additional $1.50 in value.
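If it helps to see that arithmetic spelled out, here is a minimal Python sketch. The function name `training_roi` and the dollar figures are made up for illustration; the calculation is just the one described above.

```python
def training_roi(monetary_benefits: float, program_costs: float) -> float:
    """Return ROI as a percentage: (benefits - costs) / costs * 100."""
    if program_costs <= 0:
        raise ValueError("program_costs must be positive")
    return (monetary_benefits - program_costs) / program_costs * 100


# Hypothetical figures: a $20,000 program that produced $50,000 in benefits.
roi = training_roi(monetary_benefits=50_000, program_costs=20_000)
print(f"ROI: {roi:.0f}%")  # ROI: 150%
```

In this example the company recovers its $20,000 and gains another $30,000, which is $1.50 of extra value for every $1 invested.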

This model forces you to be rigorous about a program's financial contribution, which makes it an unbelievably powerful tool for justifying your L&D budget and proving you're a profit center, not a cost center.

Alternative Frameworks for Specific Needs

While Kirkpatrick and Phillips get most of the spotlight, other models can be a better fit for certain situations.

CIRO Model (Context, Input, Reaction, Outcome): This one is fantastic for being proactive. It puts a heavy emphasis on the "Context" and "Input" stages before training even starts. The goal is to nail the needs analysis and ensure the program is designed perfectly from the get-go.

Brinkerhoff's Success Case Method (SCM): Instead of looking at averages, SCM zooms in on the outliers. It identifies the people who were most and least successful after the training and digs deep to find out why. This qualitative approach gives you rich, compelling stories and powerful insights into what makes training stick—or fall flat.

Ultimately, picking the right framework comes down to your goals. Need to prove financial value? Go with Phillips ROI. Trying to understand the human story behind your results? SCM might be your best bet. Honestly, I often find that a hybrid approach, borrowing elements from different models, gives you the most complete and useful picture.

The Metrics and KPIs That Actually Matter

Frameworks like the Kirkpatrick Model give you a map, but metrics are the destinations. To really understand if your training is working, you have to get comfortable with the numbers. This means picking specific Key Performance Indicators (KPIs) that paint a clear, data-driven picture of your program's impact.

A great way to approach this is by organizing your metrics along the Kirkpatrick levels. It’s a practical method that ensures you’re covering the entire journey, from that first "aha!" moment in training all the way to bottom-line business results.

Measuring the Learning Itself

This is Level 2 of Kirkpatrick, and it’s all about a simple question: Did they actually learn what you taught them? Completion rates are nice, but they don't prove knowledge was transferred. You need to measure the change.

The most direct way to do this is with assessments. A pre-training assessment gives you a baseline. Then, a post-training assessment shows you the "delta"—the true knowledge gain. Imagine a team scores an average of 40% on a pre-test about new compliance protocols but hits 95% afterward. That’s concrete proof of learning.
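As a rough sketch of that pre/post comparison, the Python below averages two sets of scores and reports the difference in percentage points. The function name `knowledge_gain` and the sample scores are hypothetical, and it assumes both assessments are scored on the same 0-100 scale.

```python
from statistics import mean


def knowledge_gain(pre_scores, post_scores):
    """Compare average pre- and post-training scores (percentages, 0-100)."""
    if not pre_scores or not post_scores:
        raise ValueError("both score lists must be non-empty")
    pre_avg = mean(pre_scores)
    post_avg = mean(post_scores)
    return {
        "pre_avg": pre_avg,
        "post_avg": post_avg,
        "gain_points": post_avg - pre_avg,  # the "delta" in percentage points
    }


# Hypothetical quiz scores for the same five learners, before and after.
pre = [30, 35, 40, 45, 50]
post = [90, 95, 95, 95, 100]
result = knowledge_gain(pre, post)
print(
    f"{result['pre_avg']:.0f}% -> {result['post_avg']:.0f}% "
    f"(+{result['gain_points']:.0f} points)"
)  # 40% -> 95% (+55 points)
```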

But don’t just rely on multiple-choice questions. For more nuanced skills, you can get creative:

- Skill Application Tests: Ask employees to perform a simulated task. If you’re teaching new software, have them use a specific function in a sandbox environment.

- Confidence Ratings: Before and after training, have learners rate their confidence in a skill on a 1-10 scale. A big jump is a powerful indicator of perceived competence.

- Interactive Quizzes: Tools like Mindstamp let you embed questions right into your training videos. This gives you real-time data on who is understanding what, and at which specific point in the content.

For more ideas on how to design these measurements, check out these 10 effective learning assessment strategies that can help you gather much more meaningful data.

Tracking On-the-Job Behavior Change

This is Level 3, where the rubber meets the road. It’s fantastic that people learned something, but are they using it? Measuring behavior change is crucial, and it means looking beyond the training session itself, usually about 30 to 90 days later.

Manager observations are a classic tool here. A simple checklist can help a manager spot whether an employee is applying new sales techniques or customer service scripts.

Pro Tip: Don't just ask managers, "Is the training working?" Give them a specific, observable checklist. For a communication skills workshop, this could include points like, "Used active listening techniques" or "Successfully de-escalated a customer complaint." This turns subjective opinion into structured data.
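To show what "structured data" can look like here, this small Python sketch turns checklist observations into a rate per behavior. The record layout, the field names, and the sample observations are all assumptions for illustration, not a prescribed format.

```python
# Hypothetical observation records: one dict per observed call or meeting.
observations = [
    {"employee": "A", "used_active_listening": True, "de_escalated_complaint": True},
    {"employee": "A", "used_active_listening": True, "de_escalated_complaint": False},
    {"employee": "B", "used_active_listening": False, "de_escalated_complaint": True},
    {"employee": "B", "used_active_listening": True, "de_escalated_complaint": False},
]

CHECKLIST_ITEMS = ["used_active_listening", "de_escalated_complaint"]


def behavior_rates(records, items):
    """Return, per checklist item, the share of observations where it was seen."""
    if not records:
        raise ValueError("records must be non-empty")
    total = len(records)
    return {item: sum(1 for r in records if r[item]) / total for item in items}


rates = behavior_rates(observations, CHECKLIST_ITEMS)
for item, rate in rates.items():
    print(f"{item}: {rate:.0%}")
```

Running the same calculation before the training and again 30 to 90 days later gives you a like-for-like comparison instead of a general impression.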

Other powerful metrics for behavior change include:

- 360-Degree Feedback: Gathering anonymous feedback from peers, direct reports, and managers provides a well-rounded view of an employee's new behaviors in action.

- New Process Adoption Rates: If the training was about a new system, you can track this directly. What percentage of the team is using the new CRM feature? How many projects are following the new agile methodology?

- Quality Assurance Scores: For roles in customer support or manufacturing, comparing QA scores from before and after the training can show a direct improvement in performance quality.

Connecting Training to Business Results

We’ve now reached Level 4—the ultimate goal. This is where you connect those behavior changes to tangible business outcomes. This is how you prove your training initiative wasn't just a line item expense, but a strategic investment.

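To pull this off, you have to tie your training to existing business KPIs: for example, quarterly revenue for a sales program, safety incidents for a compliance course, or engagement scores and voluntary turnover for leadership development.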

The trick is to identify these KPIs before you even start building the training. This ensures your program is laser-focused on moving the needles that leadership actually cares about.

Calculating Your Training ROI

Finally, we get to the Phillips Model's Level 5: Return on Investment (ROI). This metric boils down all your Level 4 results into a single, undeniable financial figure.

The formula itself is pretty straightforward:

Training ROI (%) = [(Monetary Benefits - Training Costs) / Training Costs] x 100

The real challenge? Isolating the training's impact. A jump in sales could be from your training, a new marketing campaign, or just a hot market. The most credible way to separate these is a control group: compare the performance of a trained team against a similar, untrained team over the same period. The difference in their results is a strong indicator of your training's unique contribution.

For instance, if your trained sales team increased their average deal size by $5,000 while the control group only saw a $500 increase, you can reasonably attribute about $4,500 of that gain to your program, assuming the two teams were comparable to begin with. Now you’ve transformed your evaluation from a simple report into a powerful business case.
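The comparison in that example is a simple difference-in-differences calculation, which can be sketched in Python like this. The function name and the starting deal sizes are hypothetical; only the $5,000 and $500 changes come from the example above. It also assumes both teams would have followed the same trend without the training.

```python
def attributable_gain(trained_before, trained_after, control_before, control_after):
    """Change in the trained group minus change in the control group."""
    trained_change = trained_after - trained_before
    control_change = control_after - control_before
    return trained_change - control_change


# Hypothetical average deal sizes; both teams start at $20,000.
gain = attributable_gain(
    trained_before=20_000, trained_after=25_000,  # +$5,000
    control_before=20_000, control_after=20_500,  # +$500
)
print(f"Gain attributable to training: ${gain:,}")  # Gain attributable to training: $4,500
```

Subtracting the control group's change is what strips out the market-wide effects, like a marketing campaign, that lifted both teams.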

Using Technology to Streamline Your Evaluation

Let's be honest: wrestling with spreadsheets and chasing down manual feedback forms can make evaluating training a total nightmare. It's a massive time sink.

Switching to modern tech isn't just about clawing back those hours. It’s about unlocking a level of insight that manual tracking could never give you. When you let technology handle the grunt work, you free yourself up to focus on what actually matters—analyzing the data and refining your strategy.

It allows you to build a connected system that tracks the entire learning journey. You can follow an employee from their first click in a module all the way to their long-term impact on business goals, without getting buried in administrative tasks.

Harnessing Your Learning Management System

Your Learning Management System (LMS) is ground zero for evaluation data. It's the central hub for your training efforts and, frankly, a goldmine of foundational metrics. An LMS automatically logs the basic, yet crucial, data points that kick off your entire analysis.

Inside your LMS dashboard, you'll find the first layer of evidence:

- Completion Rates: The most basic metric, but it tells you who actually finished the course. It’s your baseline for engagement and accountability.

- Assessment Scores: This is a direct line to Kirkpatrick's Level 2 (Learning). Tracking quiz and test results is a straightforward way to see if knowledge was actually transferred.

- Time Spent on Modules: This can be surprisingly revealing. It shows you which topics were the most engaging or, on the flip side, where people got stuck and might need more support.

These aren't just vanity metrics; they provide a quick, high-level snapshot of how your training was received and are the first place you should look to gauge effectiveness at scale.

Gaining Deeper Insights with Interactive Video

A standard, linear video is a one-way street. But interactive video? That’s a two-way conversation.

Tools like Mindstamp let you embed questions, polls, and clickable hotspots directly into your training videos. This completely changes the game for measuring comprehension and engagement. Instead of waiting for a survey at the very end, you get immediate feedback, right in the moment.

You can see exactly which concepts are landing and which are causing confusion, all tied to a specific timestamp in the video. This opens the door for real-time clarification and gives you a much richer picture of the learning process as it happens.

This screenshot from Mindstamp shows just how easy it is to add interactive elements like buttons and questions right onto the video's timeline.

The visual editor makes it simple to transform any passive video into an active learning experience that doubles as a powerful data collection tool.

Key Insight: Interactive video closes the gap between content delivery and comprehension assessment. You no longer have to guess if learners understood a key point; you can ask them right then and there.

Imagine you're rolling out training on a critical new safety protocol. You could embed a quick multiple-choice question immediately after explaining the procedure. The collective answers instantly tell you if the message hit home or if you need to reinforce it. For compliance and skills training where understanding is non-negotiable, that's invaluable.

Integrating Surveys and Performance Tools

To get the full 360-degree view, you have to connect learning data with what’s actually happening on the job. This is where dedicated survey and performance management tools create a truly cohesive evaluation system.

Automated Post-Training Surveys: Use tools like SurveyMonkey or Google Forms to automatically trigger feedback surveys the second an employee finishes a course. You can ask targeted questions about the content's relevance, the instructor's effectiveness, or the learning environment itself.

Performance Management Software: Your company’s performance software is already tracking key performance indicators (KPIs), manager feedback, and progress toward goals. By integrating this with your LMS, you can start to draw clear, direct lines between a specific training program and a measurable bump in an employee's quarterly performance review.

This kind of integrated approach is the secret to systematically measuring behavioral change (Kirkpatrick's Level 3) and bottom-line business results (Level 4). It connects the dots between learning and doing, arming you with the hard evidence needed to prove your training's value to leadership.

How Effective Training Drives Employee Retention

When you take the time to properly evaluate training effectiveness, you're doing a lot more than just checking a box. You're actually tracking one of the most powerful drivers of employee loyalty. Great training is a killer retention tool, but only if you can actually see and show its impact.

Let's be real: employees today aren't just looking for a paycheck. They want a future. They are actively seeking out companies that are willing to invest in their personal and professional growth.

When your training programs lead to real, demonstrable skill progression, you're sending a crystal-clear message: we value you, and we're invested in where your career is headed. This is what turns training from a corporate chore into a tangible benefit that makes people want to stick around.

From Training Satisfaction to Retention Risk

Getting a high "training satisfaction" score is nice, but it's a vanity metric. The real magic happens when you connect the dots between training, skill development, and career progression. Your evaluation data can become a surprisingly accurate crystal ball for predicting potential retention problems.

Put yourself in an employee's shoes. If they feel like they’re treading water, not learning anything new, or see no clear path forward, their eyes start to wander. That’s when LinkedIn profiles get polished up. This is where your data can sound the alarm.

Imagine these two scenarios:

- Scenario A: A department gives its training rave reviews but, three months later, scores poorly on skill application assessments. That's a huge red flag. It means the training isn't sticking, which breeds frustration and kills engagement.

- Scenario B: Another team shows massive, measurable skill gains after a new certification program. Shortly after, two people from that team get promoted into roles that need those exact skills. That’s a powerful success story that tells everyone else: "Stick with us, and you can grow, too."

By looking for these patterns, you can spot teams at risk of turnover long before the resignation letters start piling up.

Building a Culture That Keeps People

Companies that consistently measure and broadcast their training's impact build an incredible employer brand. They create a culture of growth that not only attracts top talent but, more importantly, convinces them to stay.

It's all about proving you offer more than just a salary. A solid, structured onboarding process paired with ongoing development opportunities is the foundation. In fact, organizations with structured onboarding see an 82% improvement in employee retention. That’s a massive win for workforce stability.

But this commitment can't be a secret. You have to share the results. When a new training program leads to a 20% jump in team efficiency, shout it from the rooftops. When an employee uses skills from an interactive video course to solve a huge client problem, make them a hero.

Key Takeaway: Proving that your training leads to real career opportunities and better performance is how you build true loyalty. People stay where they can see a future for themselves, and your evaluation data is the proof.

Properly measured training is about so much more than a performance bump. It builds a stable, motivated, and skilled team that sees a long and rewarding future with you. This makes the ability to evaluate training effectiveness a non-negotiable part of any serious retention strategy.

You can learn more about using interactive video to improve training outcomes in our guide on the topic.

Answering Your Key Evaluation Questions

When you start to get serious about evaluating training effectiveness, a lot of practical questions pop up. It's one thing to understand the theory, but applying it in the real world can feel a bit messy. Let's tackle some of the most common questions we hear from L&D pros to give you some clear, straightforward answers.

Think of this as your quick-reference guide for getting over those common hurdles.

What Is the Hardest Part of Evaluating Training Effectiveness?

Hands down, the biggest challenge is proving the direct link between your training and the company's bottom line (that’s Kirkpatrick's Level 4). It’s the classic correlation vs. causation headache. Did sales really jump because of your new program, or was it the big marketing campaign that launched at the same time?

To get a clear answer, you have to think like a scientist. The gold standard is using control groups. Just compare the performance of a team that went through the training against a similar team that didn't. The difference in their results is the most compelling evidence you can present for the training's unique impact.

If control groups aren't an option, you can still build a strong case:

- Trend Line Analysis: Pull performance data for several reporting periods before the training and see how it stacks up against the period after.

- Forecasting Models: Use your historical data to project what performance would have looked like without the training. Then, compare that forecast to what actually happened.

The key is to plan for this from the very beginning, long before you launch. It's how you build a credible story.
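Here is one way the forecasting idea could be sketched in Python: fit a straight line to the pre-training numbers, extend it into the post-training period, and compare it with what actually happened. The function names and the monthly figures are hypothetical, and a straight-line trend is an assumption; seasonal or volatile metrics would need a more careful model.

```python
def fit_linear_trend(values):
    """Least-squares line through (0, v0), (1, v1), ...; returns (slope, intercept)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two data points to fit a trend")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def uplift_vs_forecast(pre_period, post_period):
    """Actual post-training values minus the values the pre-training trend predicts."""
    slope, intercept = fit_linear_trend(pre_period)
    start = len(pre_period)
    forecast = [intercept + slope * (start + i) for i in range(len(post_period))]
    return [actual - expected for actual, expected in zip(post_period, forecast)]


# Hypothetical monthly KPI values: four months before training, two after.
pre = [100, 102, 104, 106]   # steady growth of 2 per month
post = [115, 118]            # the trend alone would predict 108 and 110
print(uplift_vs_forecast(pre, post))  # [7.0, 8.0]
```

The positive differences are the part of the improvement the existing trend does not explain. That is suggestive rather than conclusive, since other changes in the same period could also be responsible.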

How Often Should We Evaluate Our Training Programs?

Evaluation isn't a one-and-done task you can just check off a list. It’s an ongoing process, and timing is everything. Each level of the Kirkpatrick model really has its own ideal schedule for measurement.

A solid rhythm usually looks something like this:

- Reaction (Level 1): Measure this immediately after the training wraps up. You want to capture people's gut reactions while the experience is still fresh in their minds. A quick digital survey is perfect for this.

- Learning (Level 2): Test this right before and right after the program. This pre- and post-assessment model is the clearest way to show a direct knowledge gain.

- Behavior (Level 3): This one requires some patience. You need to check for on-the-job changes around 30, 60, or 90 days post-training. This gives employees enough time to actually start using what they've learned.

- Results (Level 4): This is your long game. It’s best to review these metrics quarterly or even annually. You need enough time to align with the company's business cycles to show a real, meaningful impact.
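If you want to put that rhythm on a calendar, a small Python sketch like the one below can generate the checkpoints from a program's dates. The function name, the sample dates, and the choice of 91 and 365 days for "quarterly" and "annually" are assumptions for illustration; adjust them to your own business cycles.

```python
from datetime import date, timedelta


def evaluation_schedule(training_start: date, training_end: date) -> dict:
    """Map each Kirkpatrick level to the dates it should be measured on."""
    if training_end < training_start:
        raise ValueError("training_end must not be before training_start")
    return {
        "Level 1: Reaction": [training_end],
        # Pre-assessment at the start, post-assessment at the end.
        "Level 2: Learning": [training_start, training_end],
        "Level 3: Behavior": [training_end + timedelta(days=d) for d in (30, 60, 90)],
        # Assumption: "quarterly" is about 91 days and "annually" is 365 days out.
        "Level 4: Results": [training_end + timedelta(days=d) for d in (91, 365)],
    }


# Hypothetical program dates.
schedule = evaluation_schedule(date(2025, 1, 13), date(2025, 1, 15))
for level, dates in schedule.items():
    print(level, [d.isoformat() for d in dates])
```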

Can I Effectively Evaluate Soft Skills Training?

Yes, absolutely. You just have to change your approach. Instead of testing for right-or-wrong knowledge, you focus on observing behavior. You can't give a multiple-choice quiz on "empathy," but you can absolutely measure the actions that demonstrate it.

For soft skills, you’ll want to zero in on Level 3 (Behavior) and Level 4 (Results).

Pro Tip: Use 360-degree feedback. Gathering structured, anonymous input from a person’s manager, peers, and direct reports—both before and after the training—gives you a fantastic, well-rounded view of any behavioral shifts.

You can also tie the training to business KPIs that are influenced by these skills. For instance, a communication program for managers could be linked to a bump in their team's employee engagement scores or a drop in customer complaints. For more on this, check out our comprehensive guide on how to measure training effectiveness.

What Is Considered a Good ROI for a Training Program?

This is the million-dollar question, and the honest answer is: it depends. There's no single magic number for a "good" ROI, because it's completely tied to your industry, the cost of the program, and what you were trying to achieve.

That said, any ROI greater than 0% is a technical win, because it means the training more than paid for itself.

Most L&D teams I know aim for an ROI of 100% or more. Hitting that number means the program generated double its cost in value—a fantastic result. But really, the most important thing isn't hitting some arbitrary number. It's about demonstrating a clear, positive financial return. The ROI calculation is a powerful way to speak leadership's language and prove that L&D is a strategic partner, not just a cost center.

Ready to get real-time data from your training videos? Mindstamp turns passive content into an engaging, data-rich experience. Embed quizzes, track engagement, and see exactly what your learners understand. Learn more and get started at https://mindstamp.com.
