Assessing Learning with Interactive Video: Boost Engagement

Let's be honest: traditional quizzes often feel more like a memory test than a real measure of understanding. For a long time, they were the standard in both education and corporate training. But we're starting to see their limits. Effective learning assessment requires dynamic, in-context methods that show us what someone truly comprehends and how they can apply it, not just what they can recall for a test.

This means we need to move past those static, end-of-module tests and toward interactive experiences that give us a window into how learners are actually thinking.

Moving Beyond the Traditional Quiz

The classic quiz has its place, but it has a fundamental flaw: it asks learners to spit back facts after the learning is supposedly finished. It's a final checkpoint, but it doesn't tell the whole story. Did they really get the concept, or did they just cram enough to pass the test? It’s a question that keeps a lot of trainers and educators up at night.

This is where interactive video completely changes the game. You're no longer just pushing content at a passive viewer. By embedding questions, clickable hotspots, and instant feedback directly into the video, you start assessing learning in the moment. Instead of waiting until the very end, you can check for understanding right after you’ve explained a tricky concept.

Shifting from Recall to Application

Think about a standard safety training video. The old way would be to make someone watch the whole thing, then hit them with a 10-question quiz.

A much better approach? Pause the video right after you’ve shown how to handle a hazardous chemical and ask, "What is the very next step you should take?"

This simple, in-context question does a few powerful things all at once:

- Immediate Reinforcement: It forces the learner to actively apply the information they just saw.

- Actionable Data: If a lot of people get it wrong, you know exactly which part of your training isn't landing.

- Keeps Them Engaged: It turns the learner from a passive observer into an active participant. They know they need to pay attention to move forward.

The real goal here is to measure engagement and application, not just memory. When you assess within the flow of learning, you get a much richer picture of someone's thought process. This creates a foundation for more meaningful data and, ultimately, better outcomes.

This shift transforms assessment from a final, often dreaded, hurdle into a natural part of the learning journey. The data you get back isn't just a score anymore; it's a detailed map of your audience's comprehension, showing you exactly where they're confident and where they're struggling. That’s insight you can actually use to improve your materials and support your learners.

To see just how different these approaches are, let's break them down side by side.

Traditional vs Interactive Video Assessment Methods

The table below highlights the key differences between legacy assessment techniques and the more modern, effective approach offered by interactive video.

As you can see, interactive video assessment isn't just a fancier quiz. It represents a fundamental change in how we measure and support learning, providing deeper insights and a far more effective experience for everyone involved.

How to Build Your First Video Assessment

So, where do you begin when creating your first video assessment? It all starts with the video itself. But don't worry, this doesn't mean you need a Hollywood-level production budget. I've found that the most effective assessments often start with content you already have.

Think about your existing video assets. Do you have a recorded lecture, a detailed product demo, or maybe a soft skills training that walks through a specific scenario? Any of these can be a perfect foundation, as long as the video has clear, specific learning goals.

Once you’ve picked your video, the real magic happens: adding the interactive elements. A classic mistake I see people make is tacking a quiz on at the very end. A far better strategy is to sprinkle your questions throughout the video at key moments. For example, right after you've broken down a tricky concept, pause the action and drop in a multiple-choice question. This is your chance to check for understanding in real time.

This simple shift changes everything. It turns what would have been a passive viewing experience into an active learning session, keeping your audience locked in and thinking, not just waiting for the progress bar to finish.

Crafting Questions That Truly Assess

Now, let's talk about the questions themselves. Their quality is just as crucial as their placement. It's easy to fall back on simple recall questions like, "What was the name of the feature we just discussed?" Instead, push your learners to think more deeply. You want to see if they can actually apply what they've learned.

Here are a few ways I like to frame questions to get beyond basic recall:

- Scenario-Based Questions: Give them a mini-case study. Ask something like, "Based on the process you just saw, what's the next logical step here?"

- Best-Choice Questions: Offer a few good options, but ask the learner to pick the most effective solution. This tests nuance and critical thinking.

- Open-Ended Feedback: Use free-response questions like "Why do you think this approach is better?" to get a window into their thought process.

In Mindstamp, you can see how easy this is. The editor lets you drag different question types right onto your video’s timeline. You can nail the timing down to the exact second, ensuring your check-in aligns perfectly with the content you just delivered.
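If it helps to picture what "questions on a timeline" amounts to under the hood, here is a minimal sketch in Python. It is not Mindstamp's data model or API; the `TimedQuestion` class, the `due_questions` helper, the timestamps, and the placeholder answer choices are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TimedQuestion:
    at_seconds: float      # playback position where the video should pause
    prompt: str
    choices: List[str]
    correct_index: int     # index into choices


def due_questions(questions, previous_time, current_time):
    """Return questions whose timestamp was crossed since the last playback tick."""
    return [q for q in questions if previous_time < q.at_seconds <= current_time]


# Hypothetical timeline: placeholder choices, not real training content.
timeline = [
    TimedQuestion(95.0, "What is the very next step you should take?",
                  ["Option A", "Option B", "Option C"], 1),
    TimedQuestion(210.0, "What's the next logical step here?",
                  ["Option A", "Option B"], 0),
]

for q in due_questions(timeline, previous_time=94.5, current_time=95.5):
    print(f"Pause at {q.at_seconds}s and ask: {q.prompt}")
```

The point of the sketch is the placement rule: each question is tied to the moment the concept was just explained, rather than being collected in a block at the end.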

A well-placed, thoughtful question does more than just test knowledge; it reinforces it. Prompting learners to actively retrieve and apply information is a form of retrieval practice, which tends to make the learning more durable than simply re-watching the material.

Ultimately, your goal is to make the assessment feel like a helpful, integrated part of the learning journey, not a pop quiz that just gets in the way. If you want to get into the nuts and bolts of the tools, our guide on how to create interactive video is a great next step.

The Bigger Picture on Learning and Access

Doing this work—creating truly effective learning assessments—has an impact that goes far beyond a single training module. It connects to the broader mission of making education more equitable and accessible for everyone.

Data from the Global Partnership for Education highlights just how critical systemic investment is. Their efforts have helped get nearly 10 million more children into school. Yet, with 16% of primary-school-age children in their partner countries still not attending, it’s a stark reminder that smart, data-informed educational strategies are essential to find and fix these gaps. Targeted funding and better policies are paving the way, showing how we can expand access to quality education for all.

Using Clicks and Hotspots to Assess Learning

Not every learning assessment needs to be a formal question-and-answer session. Sometimes, the most powerful insights come from simply watching what catches a learner's eye. This is where interactive hotspots and clickable video elements really shine, giving you a way to assess learning that's more about observation than interrogation.

Instead of a pop quiz, you’re inviting them to explore. This simple shift changes the entire dynamic from a test to a self-guided discovery. It's a low-pressure method for measuring engagement that reveals what people instinctively find important, interesting, or just plain confusing. The data you get back is behavioral rather than graded: it shows what people chose to look at, not what they got right.

Gauging Interest and Identifying Knowledge Gaps

Picture a training video walking employees through a new software interface. You could layer hotspots over key features, with each click revealing a quick text explanation or a short video deep-dive on that specific function.

This is where the magic happens. By tracking which hotspots get clicked, you start gathering some incredibly valuable intel.

Let's say 70% of your viewers click the hotspot over the "Advanced Reporting" button, but only 10% bother to check out "Dashboard Customization." You've just uncovered a huge piece of the puzzle. That click data tells you the reporting feature is either a major point of interest or a significant source of confusion for your team.

This isn't just about counting clicks; it's about understanding intent. You can use this information to:

- Pinpoint complex topics: A flurry of clicks on one specific hotspot is a massive red flag that learners need more support in that area.

- Measure genuine curiosity: See which optional, deep-dive elements people choose to explore. This is a great indicator of their engagement level.

- Fine-tune your next video: Use the data to create follow-up content that directly addresses the most-clicked topics, whether those clicks signal strong interest or lingering confusion.

Assessing learning through clicks moves beyond simple right or wrong answers. It measures what captures attention and prompts action, giving you a real-world look into the learner's mindset and priorities.
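To make the percentages above concrete, here is a rough sketch of how a click rate per hotspot could be computed from raw click events. The event format (pairs of viewer ID and hotspot name) and the `hotspot_click_rates` function are assumptions for illustration, not an export format from any particular platform.

```python
def hotspot_click_rates(viewer_ids, clicks):
    """Share of unique viewers who clicked each hotspot at least once.

    viewer_ids: everyone who watched the video
    clicks: list of (viewer_id, hotspot_name) events; repeats are allowed
    """
    total = len(set(viewer_ids))
    if total == 0:
        return {}
    clicked_by = {}
    for viewer_id, hotspot in clicks:
        clicked_by.setdefault(hotspot, set()).add(viewer_id)
    return {hotspot: len(viewers) / total for hotspot, viewers in clicked_by.items()}


# Made-up data: 10 viewers, 7 open "Advanced Reporting", 1 opens "Dashboard Customization".
viewers = [f"v{i}" for i in range(10)]
clicks = [(f"v{i}", "Advanced Reporting") for i in range(7)]
clicks.append(("v0", "Advanced Reporting"))       # a repeat click, counted once
clicks.append(("v0", "Dashboard Customization"))

rates = hotspot_click_rates(viewers, clicks)
for hotspot, rate in sorted(rates.items(), key=lambda item: -item[1]):
    print(f"{hotspot}: {rate:.0%}")
```

Counting unique viewers instead of raw clicks keeps one enthusiastic clicker from skewing the picture.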

A Real-World Scenario in Sales Training

Let's put this into practice with a sales training scenario. You’ve created a video that simulates a client meeting, and the goal is for your sales reps to identify the subtle buying signals the client gives off.

Instead of asking a stale question like, "What was the buying signal at the 2:45 mark?" you can make the assessment feel much more active and true-to-life.

Right when the client actor leans forward and asks about pricing specifics, you could flash a subtle, clickable hotspot over them for just a few seconds with a simple prompt: "What does this mean?"

This approach doesn't just test a rep's memory of the training material; it tests their ability to recognize a critical moment as it happens—just like they would in a real conversation. Tracking who clicks that hotspot and who misses it entirely gives you a much richer, more meaningful assessment than any multiple-choice question ever could.

You’re no longer just evaluating their knowledge. You're evaluating their situational awareness, a crucial skill that standard quizzes simply can't measure. This click-based data adds a vital, practical layer to your understanding of their true readiness.

Creating Personalized Learning Paths with Branching

Imagine an assessment that doesn't just grade a learner, but actively adapts to them in real time. That's the real magic of using branching logic in your video content. It lets you build dynamic, truly personalized learning paths that change based on how someone answers your questions.

Frankly, this is a massive leap forward for assessing learning. We're moving away from the old one-size-fits-all model and into a far more responsive, individualized experience.

With branching, a correct answer can instantly send a high-achiever to more challenging material. But an incorrect one? That can guide a struggling viewer to a quick remedial segment that re-explains the concept they just missed. You're supporting learners at both ends of the spectrum, keeping top performers engaged while ensuring no one gets left behind.

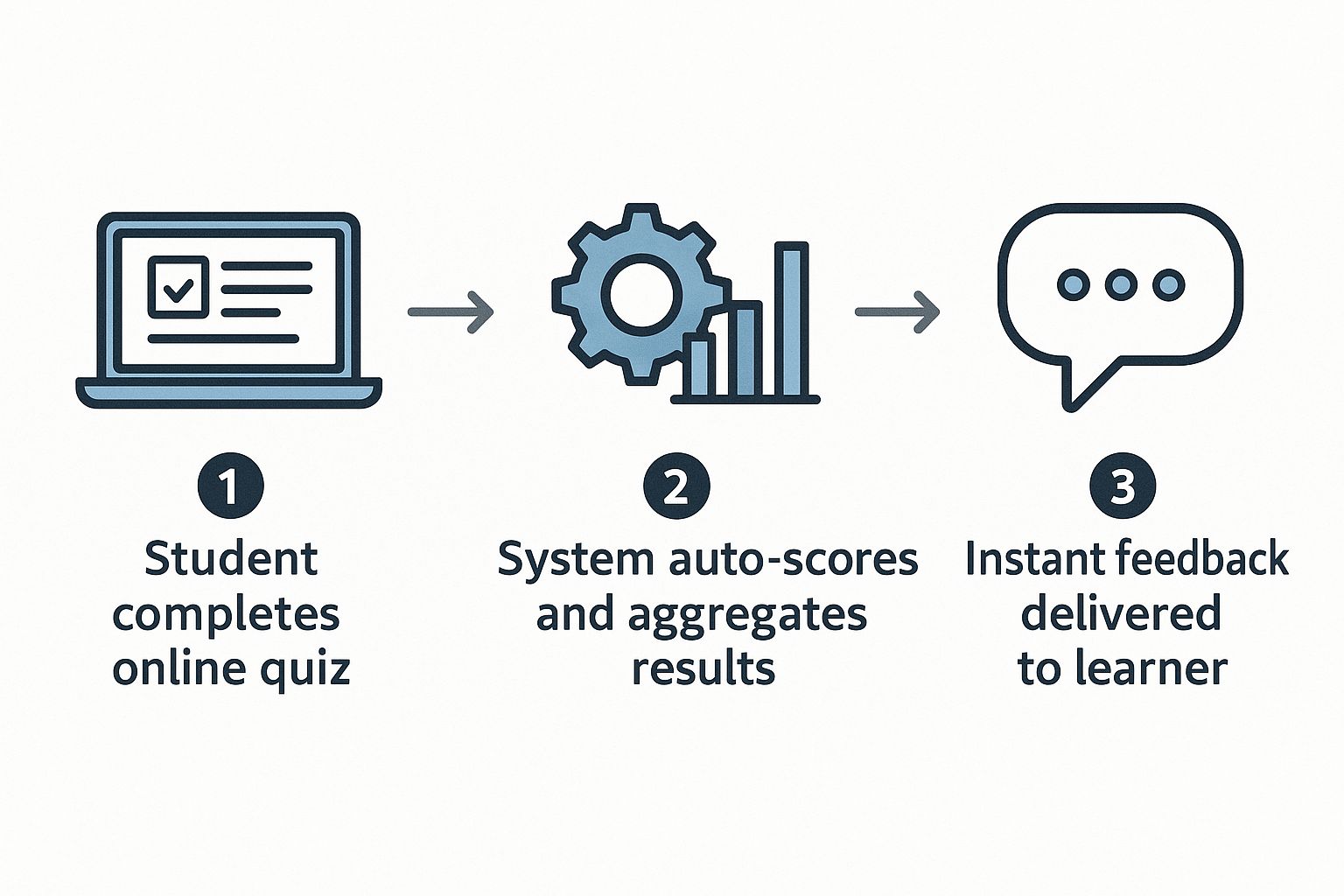

The whole process creates a seamless cycle of interaction, scoring, and immediate feedback, as you can see below.

This kind of instant redirection is what makes branching so powerful—it’s all enabled by automated feedback loops that happen in the moment.

Mapping a Practical Branching Scenario

Let's make this tangible. Think about a corporate compliance module on data privacy. The goal isn't just for employees to watch it, but to prove they understand the company's data handling policies inside and out.

You could start with a core video segment that explains the difference between Personally Identifiable Information (PII) and non-sensitive data. Right at the end, you pop in a multiple-choice question:

- Question: An internal report contains a list of customer email addresses and their associated order numbers. Is this report considered to contain PII?

Here’s where the branching comes in, creating two totally distinct paths based on their answer:

- Correct Answer (Yes): Perfect. The viewer has demonstrated they get it. They're automatically sent to the next segment, which might cover more advanced topics like data encryption standards. No time wasted.

- Incorrect Answer (No): This is where the magic happens. Instead of just seeing a "Wrong!" message, the viewer is branched to a short, 30-second remedial video. This clip could feature a data expert explaining exactly why an email address is PII, offering another clear-cut example. After that, they’re looped back to the original question to try again.

This simple structure builds an incredibly powerful feedback loop. You're not just marking an answer wrong; you’re providing immediate, targeted support to fix a misunderstanding before it takes root.
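The PII scenario above boils down to a tiny lookup table: each segment knows where to send the viewer next. Here is one way to sketch it in Python. The segment names, the `BRANCHES` table, and the `next_segment` function are hypothetical and only mirror the example; they are not how any specific tool stores branching rules.

```python
# Hypothetical branching map for the data privacy example.
BRANCHES = {
    "pii_intro": {
        "correct_answer": "Yes",
        "on_correct": "encryption_standards",   # move on to advanced material
        "on_incorrect": "pii_remedial",         # short clip re-explaining PII
    },
    "pii_remedial": {
        "return_to": "pii_intro",               # loop back to retry the question
    },
}


def next_segment(current, answer=None):
    """Pick the next segment from the current one and the viewer's answer."""
    node = BRANCHES[current]
    if "return_to" in node:
        return node["return_to"]
    if answer == node["correct_answer"]:
        return node["on_correct"]
    return node["on_incorrect"]


print(next_segment("pii_intro", "Yes"))   # encryption_standards
print(next_segment("pii_intro", "No"))    # pii_remedial
print(next_segment("pii_remedial"))       # pii_intro
```

Writing the paths out like this before you build anything is a cheap way to check that every wrong answer leads somewhere helpful and that no branch dead-ends.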

The real value of branching is that it makes assessment an active part of the teaching process. It’s no longer just a final judgment but a tool for guiding and reinforcing knowledge right when the learner needs it most.

The Strategic Value of Adaptive Paths

This way of assessing learning offers so much more than simple pass/fail metrics. It provides a safety net for those who need it, building their confidence and ensuring they meet the required competency level. This is absolutely critical for mandatory training where a complete and accurate understanding is non-negotiable.

Let's face it, many learners don't know what they don't know. Self-regulation, meaning the ability to monitor and steer your own learning, is a real challenge for a lot of people. Branching logic helps by providing the structure and support they need, guiding them based on their demonstrated knowledge.

By tailoring the journey, you make the entire learning process more efficient and, more importantly, vastly more effective.

How to Analyze Data and Measure Learning Outcomes

An interactive assessment is only as good as the data you get from it. Honestly, just knowing who passed or failed a quiz doesn't tell you a whole lot. To really understand if your training is working, you need to roll up your sleeves and dive into the analytics.

This is where you shift from just testing knowledge to actually measuring and understanding it. Detailed reports are your roadmap, showing you exactly which concepts your audience is struggling with and which ones are landing perfectly.

Digging into Question-Level Analytics

The most powerful and immediately useful data lives at the individual question level. Forget the overall score for a minute and break it down.

What if 85% of your learners get question three wrong? That’s not a learner problem—it’s a content problem. Right there, that single data point tells you the concept you explained right before that question is unclear. Now you can go back and fine-tune that specific segment, maybe by adding a better example or clarifying the explanation.

Even the wrong answers tell a story. When you see learners in a multiple-choice question consistently picking the same incorrect option, you’ve just uncovered a common misunderstanding. That's gold. You can now address that specific misconception head-on in future training.
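If your platform lets you export raw responses, both checks are easy to run yourself. The sketch below assumes a simple export of (question ID, chosen option) pairs plus an answer key; those shapes, the `question_report` function, and the sample numbers are invented for illustration.

```python
from collections import Counter, defaultdict


def question_report(responses, answer_key):
    """Miss rate and most common wrong option for each question.

    responses: list of (question_id, chosen_option)
    answer_key: dict mapping question_id to the correct option
    """
    choices_by_question = defaultdict(list)
    for question_id, choice in responses:
        choices_by_question[question_id].append(choice)

    report = {}
    for question_id, choices in choices_by_question.items():
        wrong = [c for c in choices if c != answer_key[question_id]]
        top_wrong = Counter(wrong).most_common(1)
        report[question_id] = {
            "miss_rate": len(wrong) / len(choices),
            "top_wrong_option": top_wrong[0][0] if top_wrong else None,
        }
    return report


# Made-up data: 20 learners answer question 3; 17 miss it, mostly by picking "C".
responses = [("q3", "C")] * 12 + [("q3", "A")] * 5 + [("q3", "B")] * 3
report = question_report(responses, answer_key={"q3": "B"})
print(report["q3"])
```

A high miss rate points you at the segment to rework; the top wrong option tells you which misconception to address when you do.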

Interpreting Viewer Engagement and Heatmaps

Beyond right or wrong answers, your viewers’ behavior offers another rich layer of insight. This is where things get really interesting. Mindstamp’s analytics include viewer heatmaps, which give you a visual snapshot of which parts of your video are being watched, re-watched, or skipped entirely.

- High Re-watch Rates: If you notice a section that viewers are constantly rewinding to watch again, pay attention. This is where your most critical—or most confusing—information lies.

- Significant Drop-offs: See a mass exodus at a certain point? Time to investigate. Was the content too dense? Was it boring or irrelevant? This helps you tighten up your video length and pacing.

- Interaction Data: Seeing who clicked on optional hotspots or explored different branches gives you a clear picture of who your most motivated and curious learners are.

This granular data is what makes modern assessment so powerful. You’re not just getting a final score; you're getting a detailed behavioral analysis of the entire learning journey. This allows for precise, targeted feedback and continuous improvement.
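For a feel of what sits behind a heatmap, here is a simplified sketch that counts how many times each second of a video was played and then looks for re-watched stretches and the sharpest drop-off. The playback-span format and both function names are assumptions for illustration, not a description of how Mindstamp computes its heatmaps.

```python
def view_counts(segments, duration):
    """Count plays per second. segments: (viewer_id, start_sec, end_sec), end exclusive."""
    counts = [0] * duration
    for _, start, end in segments:
        for second in range(max(0, start), min(duration, end)):
            counts[second] += 1
    return counts


def biggest_drop(counts):
    """Return (second, drop) where plays fall the most from one second to the next."""
    if len(counts) < 2:
        return None
    drop, second = max((counts[i - 1] - counts[i], i) for i in range(1, len(counts)))
    return second, drop


# Made-up data for a 10-second clip: two viewers leave at 0:06, one rewinds 0:03-0:05.
segments = [("a", 0, 10), ("b", 0, 6), ("c", 0, 6), ("a", 3, 5)]
counts = view_counts(segments, duration=10)
unique_viewers = len({viewer for viewer, _, _ in segments})

rewatched = [s for s, c in enumerate(counts) if c > unique_viewers]
print("plays per second:", counts)
print("re-watched seconds:", rewatched)
print("sharpest drop-off:", biggest_drop(counts))
```

Seconds played more often than there are viewers must have been re-watched by someone, which is the same signal the heatmap surfaces visually.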

This kind of deep analysis is similar to what happens on a global scale. The Programme for International Student Assessment (PISA), for example, has been a key benchmark since 2000. It evaluates the skills of 15-year-old students across 81 countries and economies (as of its 2022 cycle) in reading, mathematics, and science, with creative thinking added as an innovative domain in 2022. The goal is the same: to gain deep insights into educational effectiveness to drive improvements.

Turning Insights into Action

So, what do you do with all this data? The real magic of using interactive video for training or educational content is how easy it is to act on these insights and make your content better over time.

Based on your analysis, you could:

- Rewrite confusing questions to make sure they’re truly testing comprehension.

- Add remedial branching paths for tough concepts where you see high failure rates.

- Create short follow-up videos that directly address the common pain points you spotted in the heatmaps.

This data-driven approach ensures your training materials don't just sit on a shelf—they evolve and get sharper with every view. If you’re looking to push this even further, exploring AI tools for educational assessment can open up new ways to streamline how you measure and act on learning data.

When you start swapping out traditional learning tools for interactive video, it’s only natural for a few questions to pop up. It’s a completely different way to think about content and assessment, so let's dig into some of the most common things we hear from teams making the shift.

This isn't just about plugging in new tech. It’s about fine-tuning your entire training strategy to get far more meaningful results. Getting a handle on these details is what makes the difference between a clunky rollout and a truly successful one.

How Is This Different from a Standard LMS Quiz?

I get this one a lot. While both can check for knowledge, an interactive video assessment weaves the questions right into the learning material itself. This gives the questions vital context and allows for immediate feedback. You're not just measuring recall; you're measuring comprehension and application in the moment. It makes the whole experience feel like part of the lesson, not a dreaded final exam tacked on at the end.

A typical LMS quiz is a separate event, happening after the learning is over and focusing almost entirely on what someone can remember. With interactive video analytics, you also get much richer insights. We're talking view heatmaps and drop-off points—data a standard quiz just can't provide.

The real game-changer is context. Interactive video assesses understanding at the exact point of instruction, reinforcing key concepts in real-time. A separate quiz can only tell you what was remembered after the fact.

Can This Actually Work for Formal Compliance Training?

Absolutely. In fact, interactive video is a powerhouse for compliance and certification training. You can set up your video to require viewers to answer critical questions correctly before they’re even allowed to move on. This ensures they've truly absorbed the essential, non-negotiable information.

Plus, features like individual completion tracking and detailed, question-level analytics give you a clear, auditable trail. You’ll have a rock-solid record of who completed the training and, more importantly, proved they understood it. It's a robust tool for any kind of mandatory learning program.
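As a rough illustration of the two ideas above, gating progress on correct answers and keeping a per-person record, here is a small Python sketch. The attempt format, the function names, and the shape of the audit row are assumptions; your platform's completion reports will look different.

```python
def may_continue(required_question_ids, attempts):
    """True once every required question has at least one correct attempt.

    attempts: ordered list of (question_id, was_correct) for one viewer
    """
    answered_correctly = {qid for qid, was_correct in attempts if was_correct}
    return set(required_question_ids) <= answered_correctly


def audit_row(viewer_id, required_question_ids, attempts):
    """Summarize one viewer's record: attempts per question and completion status."""
    tries = {}
    for qid, _ in attempts:
        tries[qid] = tries.get(qid, 0) + 1
    return {
        "viewer": viewer_id,
        "attempts_per_question": tries,
        "completed": may_continue(required_question_ids, attempts),
    }


# Made-up record: the viewer misses q2 once, then gets it right.
attempts = [("q1", True), ("q2", False), ("q2", True)]
print(audit_row("viewer-17", ["q1", "q2"], attempts))
```

Keeping the number of attempts alongside the pass status is what separates "completed the training" from "understood it on the first try".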

What’s the Best Way to Get Started?

If you're just dipping your toes into interactive video, my best advice is to start small. Focus on a single learning objective. Please don't try to build a massive, branching epic for your first project. You'll just get overwhelmed.

Here’s a simple game plan to get you going:

- Pick a short video. Grab an existing 2-5 minute clip that explains one core concept. Keep it simple.

- Add just a few questions. Sprinkle in 2-3 simple multiple-choice questions at key moments to check for understanding.

- Dive into the data. Your initial goal is just to see how people respond and get comfortable with the platform's analytics.

Once you see the engagement skyrocket and get a taste of the rich data that comes back, you'll feel much more confident tackling more complex assessments. For a deeper dive, check out our guide on 10 effective learning assessment strategies for 2025.

Ready to see how easily you can transform your videos into powerful assessment tools? Mindstamp makes it simple to add questions, hotspots, and branching logic to any video, giving you the data you need to truly measure learning. Start your free trial today and build your first interactive assessment in minutes.
